Generative AI: What Is It and How Does It Work?

Generative AI is being adopted across many sectors as a way to simplify common tasks such as generating emails, writing code, assisting with design, or improving customer responses.

These AI systems can generate everything from text and code to images and audio. They learn from huge amounts of data. Earlier AI mostly analysed or predicted; this generation is built to create.

Since the release of ChatGPT in late 2022, adoption has picked up at a pace few expected. As of May 2025, the field has matured, with several powerful models now in widespread use, including GPT-4o (OpenAI), Claude 3.7 (Anthropic), Gemini 2.5 Pro (Google), and Llama 4 (Meta).

These models aren’t just improving in accuracy; they’re becoming multimodal, meaning they can handle a mix of inputs such as text, images, and voice. This has expanded how and where they can be used, making them more relevant to real business scenarios.

Businesses across sectors, from software to finance to healthcare, are exploring how to integrate these tools into their workflows. Some are automating routine tasks. Others are building internal copilots. Some are still just experimenting. But the interest is real, and it’s spreading.

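This blog gives a grounded overview of generative AI as it stands today: what it is, how it works, how the main model architectures compare, where it is being applied, and what impact it is having on business and society.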

The goal is simple: to help you understand where this technology fits in your work and how to use it with intention.

Generative AI refers to a class of machine learning models that are built to generate new content, not just analyse or classify existing data. These models learn patterns, structures, and relationships from massive datasets, and then use that knowledge to produce original outputs that resemble the examples they were trained on. The content could be a sentence, an image, a block of code, a melody, or even a mix of formats like text and visuals.

This is fundamentally different from traditional AI systems, which were mostly built for recognising, sorting, or predicting based on input. Generative models are trained not to retrieve or replicate, but to create something that fits the learned structure of the domain.

Let’s look at a real example:

Imagine a product manager at a software company. Each time their team launches a new feature, someone needs to write a short announcement for users, a changelog update, a helpdesk article, and maybe even a slide for internal teams.

Generative AI can assist here by drafting the first version of each, based on prior announcements, the feature’s documentation, and the tone the company typically uses. It’s not just copying a previous message; it’s creating something new that fits the context, style, and intent. An AI humanizer can then smooth out any mechanical phrasing, so the draft sounds as if it came from your team rather than a template. Finally, the human team reviews, tweaks, and finalises the content in minutes instead of hours.

This is the kind of value generative AI brings: speeding up creative, repetitive, or knowledge-intensive tasks while keeping human oversight in the loop.
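To make the product-manager example concrete, here is a minimal Python sketch of how the inputs it mentions (prior announcements, feature documentation, and house tone) might be assembled into a single prompt for a text model. The `FeatureInfo` type, the `build_announcement_prompt` function, and the prompt wording are hypothetical illustrations, not any particular tool's format, and the actual model call is left out because it depends on the provider you use.

```python
from dataclasses import dataclass


@dataclass
class FeatureInfo:
    """Hypothetical container for the facts about a newly launched feature."""
    name: str
    documentation: str


def build_announcement_prompt(
    feature: FeatureInfo,
    prior_announcements: list[str],
    tone: str,
    max_examples: int = 2,
) -> str:
    """Assemble a few-shot prompt (illustrative format) for drafting an announcement."""
    # Use the most recent announcements as style references for the model.
    examples = prior_announcements[-max_examples:]
    parts = [f"Write a short user-facing announcement in a {tone} tone."]
    for i, text in enumerate(examples, start=1):
        parts.append(f"Example {i}:\n{text}")
    parts.append(f"Feature: {feature.name}\nDocumentation:\n{feature.documentation}")
    parts.append("Draft:")
    return "\n\n".join(parts)


prompt = build_announcement_prompt(
    FeatureInfo("Dark mode", "Users can switch the dashboard to a dark theme in Settings."),
    ["We just shipped CSV export!", "Meet the new search bar.", "Two-factor login is here."],
    tone="friendly",
)
print(prompt)
```

The returned string would be sent to whichever model you use; the draft that comes back still goes through the human review step the example describes.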

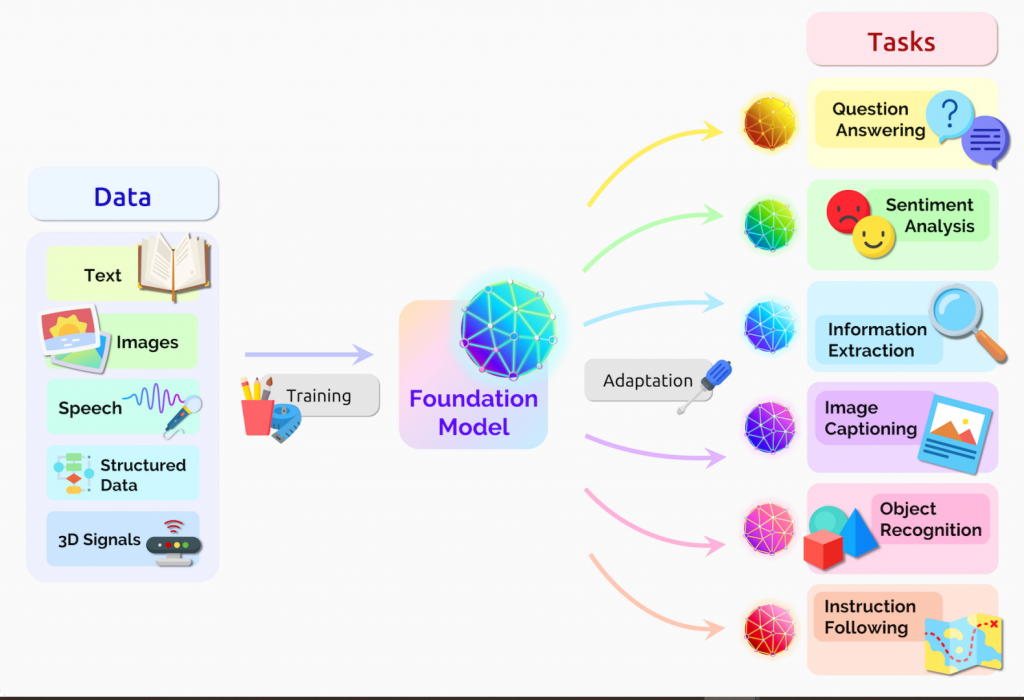

Technically, most generative AI today is built on what’s called a foundation model. These are large-scale neural networks trained on diverse and extensive datasets, covering natural language, code repositories, images, and more. During training, the model learns probabilistic relationships between elements in the data: for example, which words tend to follow others, or how visual elements are typically arranged in a photo.

When prompted, the model uses this learned statistical structure to predict what comes next, a process that, at scale, enables it to generate content that appears fluent, relevant, and often human-like.
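The loop of learning which words tend to follow others and then predicting what comes next can be illustrated at toy scale. The sketch below is a bigram word model in Python. It is far simpler than a foundation model, which uses a neural network over subword tokens rather than word counts, but it follows the same pattern: count patterns in training text, turn them into probabilities, and sample one token at a time. The corpus and function names are illustrative assumptions.

```python
import random
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows another across the training sentences."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        # <s> and </s> mark the start and end of a sentence.
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts: dict[str, Counter], word: str) -> dict[str, float]:
    """Convert raw follow-counts into a probability distribution over the next word."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}


def generate(counts: dict[str, Counter], rng: random.Random, max_words: int = 10) -> str:
    """Sample one word at a time until the end marker or the length limit."""
    word, output = "<s>", []
    for _ in range(max_words):
        probs = next_word_probs(counts, word)
        if not probs:
            break
        word = rng.choices(list(probs), weights=list(probs.values()))[0]
        if word == "</s>":
            break
        output.append(word)
    return " ".join(output)


corpus = ["the model writes code", "the team writes emails", "the team reviews code"]
counts = train_bigram(corpus)
print(next_word_probs(counts, "writes"))  # {'code': 0.5, 'emails': 0.5}
print(generate(counts, random.Random(0)))
```

With this corpus the sampler can produce "the model writes emails", a sentence that never appears in the training data but follows its learned patterns.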

Importantly, the output isn’t copied from the training data. Instead, the model generates new content that reflects patterns it has statistically learned. It’s closer to how a person might summarise a book they’ve read, or draw inspiration from multiple ideas to design something new: informed by past exposure, but not duplicating it.

Generative AI systems in 2026 rely on advanced deep learning architectures that can generate text, images, audio, video, and more, often in response to a single prompt. At their core, these systems are trained on vast datasets and learn to generate new content by predicting what comes next based on patterns they’ve seen before.

Generative AI models are built on various technologies, but three key advancements are powering the latest models:

Most modern models — like GPT-4.1, Claude 3.5, Gemini 2.5, and Llama 4 — are built on the Transformer architecture, originally introduced in 2017. But today’s versions go far beyond early implementations:

These improvements make them far more practical for complex business tasks — from analysing legal contracts to generating software prototypes.
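At the heart of the Transformer is self-attention: each position in a sequence scores every other position, turns those scores into weights with a softmax, and takes a weighted average of their values. The pure-Python sketch below shows single-head scaled dot-product attention on tiny hand-written vectors. Real models add learned query/key/value projections, many attention heads, and GPU-optimised kernels, none of which are shown here.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query with every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three token vectors; Q = K = V = x here (no learned projections, for illustration only).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = self_attention(x, x, x)
for row in weights:
    print([round(w, 3) for w in row])
```

The nested loop over queries and keys is why the comparison table further down lists attention as costly for long sequences: the work grows with the square of the sequence length.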

Diffusion models have matured rapidly. They’re used to create high-resolution images and realistic video, often in seconds rather than minutes. Tools like Sora, Veo 2, and Stable Diffusion 3.5 Turbo use faster sampling methods and support text- or audio-guided generation.
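Diffusion models learn to reverse a gradual noising process. The sketch below shows only the forward (noise-adding) half on a tiny vector, using the common closed form x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε and the linear beta schedule (1e-4 to 0.02 over 1000 steps) from the original DDPM formulation; the sample values are illustrative assumptions. The reverse (denoising) half, where a trained neural network predicts the noise to remove step by step, is what generation actually runs and is not shown.

```python
import math
import random


def linear_alpha_bars(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> list[float]:
    """Cumulative products of (1 - beta_t) for a linear beta schedule."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def noisy_sample(x0: list[float], t: int, alpha_bars: list[float], rng: random.Random) -> list[float]:
    """Jump straight to step t: x_t = sqrt(a_bar) * x0 + sqrt(1 - a_bar) * Gaussian noise."""
    a_bar = alpha_bars[t]
    return [math.sqrt(a_bar) * xi + math.sqrt(1.0 - a_bar) * rng.gauss(0.0, 1.0) for xi in x0]


alpha_bars = linear_alpha_bars(1000)
rng = random.Random(42)
x0 = [0.5, -0.2, 0.8]  # stand-in for a few pixel values
for t in (0, 250, 999):
    print(t, round(alpha_bars[t], 4), [round(v, 3) for v in noisy_sample(x0, t, alpha_bars, rng)])
```

Near t = 0 the sample is almost the original data; by the last step almost nothing of it survives. Generating a new image means running that path in reverse from pure noise, which is why the table below notes that iterative denoising makes inference expensive and why faster samplers matter.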

The latest frontier is hybrid models that combine the strengths of multiple architectures.

Examples like LanDiff, HDiffTG, and DeepSeek Janus show how models can now reason, reference, and render.

AI systems in 2026 work by layering advanced techniques — long-context Transformers, fast diffusion models, and retrieval-enhanced reasoning — to generate outputs that are coherent, multimodal, and highly customisable.
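Retrieval-enhanced generation works by fetching relevant passages from your own content and placing them in the prompt so the model answers from them. The Python sketch below uses simple word-overlap scoring as a stand-in for the vector embeddings that production systems typically use; the documents, scoring rule, and prompt format are all illustrative assumptions, and the final model call is omitted.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lower-case word set; a crude stand-in for embedding a passage."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Rank documents by how many query words they share and return the top k."""
    q = tokenize(query)
    scored = [(len(q & tokenize(doc)), i, doc) for i, doc in enumerate(documents)]
    # Most overlap first; ties keep the original document order.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [doc for score, _, doc in scored[:k] if score > 0]


def build_grounded_prompt(query: str, documents: list[str]) -> str:
    """Place the retrieved passages in the prompt so the model answers from them."""
    context = "\n".join(f"- {p}" for p in retrieve(query, documents))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"


docs = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "To request a refund, open Settings and choose Billing.",
]
print(retrieve("How do I request a refund?", docs))
# ['To request a refund, open Settings and choose Billing.']
```

The first document is missed because "refunds" and "refund" are different words to this scorer; gaps like that are one reason real systems rank passages with embeddings instead of exact word matches.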

They don’t “understand” things the way humans do, but they’ve learned enough structure and pattern from billions of examples to generate results that are increasingly useful and increasingly hard to distinguish from human work.

| Feature | Transformers | Diffusion Models | Generative Adversarial Networks (GANs) |
|---|---|---|---|
| Core Mechanism | Self-attention to weigh the importance of sequence elements | Forward (noise addition) and reverse (denoising) iterative processes | Adversarial training of a generator and a discriminator |
| Strengths | | | |
| Weaknesses | | | |
| Typical Applications | | | |
| Computational Characteristics | High for long sequences due to the attention mechanism; benefits from parallel processing | High during inference due to iterative denoising; training is computationally intensive | Training can be unstable; requires careful balancing of generator and discriminator |
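For reference, the adversarial training in the GAN column is usually written as the minimax objective from the original GAN formulation. The discriminator D tries to score real samples x (drawn from the data distribution) as real and generated samples G(z) (made from random noise z) as fake, while the generator G tries to fool it:

```latex
\min_G \max_D \; V(D, G)
  = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The "careful balancing" noted in the table refers to this tug-of-war: if D becomes much stronger or weaker than G, training can stall or oscillate.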

Generative AI isn’t just a research project; it’s actively being applied to solve problems and create opportunities across many areas of our lives and work:

Image credit: NVIDIA

When a powerful new technology enters the mainstream, it rarely stays confined to one corner of the economy. It spreads gradually at first, then all at once. That’s exactly what we’re seeing with generative AI in 2026.

This isn’t just another software upgrade. It’s a shift in how work gets done, how products are built, and how society thinks about creativity, knowledge, and responsibility. The effects may start in tech teams, but the ripples are reaching every sector — and every level of decision-making.

For most businesses, the first impact of generative AI has been simple but powerful: routine tasks get done faster. Drafting reports, writing emails, generating code snippets, answering FAQs: these jobs are increasingly being handled by AI. But that’s only the surface.

What’s more interesting is what this unlocks:

It’s not just about saving time; it’s about changing how value is created. As a result, more leadership teams are now thinking about AI not just as a cost lever, but as a core part of their growth strategy.

The AI wave is also transforming how technology is built.

At the same time, responsibility is no longer an afterthought. New tools are being built to watermark AI-generated content, document training data, and audit model performance for fairness or risk. That shift — building AI with guardrails from day one — is becoming part of the professional standard.

In society, the effects are more complex:

Most jobs have not disappeared, but the nature of work is changing. Professionals in programming, marketing, customer service, and design now need new skills, such as writing prompts, interpreting AI suggestions, integrating AI into their workflows, and checking automated results, as part of their regular responsibilities.

Upskilling and access are becoming central conversations, because if only a few groups know how to use these tools, inequality deepens. Making generative AI more accessible, both technically and educationally, is a global challenge that still needs attention.

At the same time, trust in content is under pressure. AI can now generate deepfake images, voice clips, and video with near-perfect realism. This has serious implications for misinformation, election interference, and public discourse.

Tools for detecting AI-generated content, along with clearer labeling, are starting to appear. But these efforts only work if people are generally aware that what they’re seeing could have been created by AI.

Another critical issue: bias and fairness. AI models reflect the data they’re trained on. If that data contains bias, the model can unknowingly reinforce it — in hiring recommendations, content suggestions, even legal or financial decisions. Active monitoring, diverse datasets, and transparent audit processes are no longer optional. They’re essential.

Also, there are the unresolved legal and ethical questions:

These are active debates in boardrooms, courtrooms, and policy circles — and the rules are still being written.

If you’re planning to build a generative AI chatbot, here’s how you can go from idea to live chatbot — powered by models like GPT-4o or Claude — without writing a single line of code.

1. Sign Up

Go to YourGPT Chatbot and create an account.

Once you’re in, you can start building a generative AI chatbot for your website, product, or internal system — all in a no-code setup.

2. Train with Your Data

Upload your content — PDFs, URLs, FAQs, Notion docs, Google Drive, or plain text. The chatbot learns from this data to generate accurate, context-aware responses based on user queries.

3. Customise Behaviour and Personality

Set your chatbot’s appearance, define its persona, and pick a model for your use case. You can also set restrictions on what it should avoid, along with other behaviour settings.

4. Integrate Anywhere

Embed the chatbot on your site using a script snippet. You can also connect it to WhatsApp, Instagram, Slack, or any other platform your users are already on.

5. Go Live

You’re done. Your generative AI chatbot is live, ready to chat, respond, and take real action with your users in real time.

Generative AI refers to models that create new content—text, images, audio, or video—based on patterns they’ve learned from large datasets. It’s different from traditional AI, which usually focuses on recognising or classifying data.

These models predict what comes next in a sequence based on training data. When you give a prompt, they generate content by using patterns they’ve statistically learned. Transformers, diffusion models, and retrieval-based methods are often used.

ChatGPT helps draft emails. Midjourney creates images. GitHub Copilot assists developers. Businesses use generative AI for marketing copy, product visuals, code generation, and customer responses.

They’re different. Traditional AI is best for classification, forecasting, and analysis. Generative AI is used when you need new content, such as text, code, or visuals. Many businesses use both depending on the task.

Popular models include GPT-4o (OpenAI), Claude 3.7 (Anthropic), Gemini 2.5 Pro (Google), and Llama 4 (Meta). These support multimodal input and are widely used in business tools and platforms.

Companies are automating documentation, generating product content, creating marketing assets, building chat assistants, and improving internal workflows using AI copilots and content generation tools.

Key risks include biased output, misinformation, misuse (e.g., deepfakes), and data privacy concerns. Responsible use includes human oversight, content filtering, and regular model evaluation.

Yes. Open-source models like Llama 4 or DeepSeek can run on local infrastructure. This allows for more control but requires substantial GPU resources and technical know-how.

It depends on your use case. Text tasks → GPT-4o or Claude. Images → Stable Diffusion. Video → Sora. Consider speed, cost, deployment options, and input/output types when choosing.

Yes, copyright and legal ownership of AI-generated content is still a grey area. For example, if you use a model like ChatGPT to generate content, questions arise around authorship, rights, and liability. Some jurisdictions may not consider AI-generated work as copyrightable unless a human makes meaningful contributions.

For a detailed look at this, read our full blog on copyright challenges with AI.

Generative AI has moved beyond experimentation. It is now influencing how teams communicate, build, and solve problems across nearly every industry.

But real impact doesn’t come from simply adding AI tools. It comes from using them with purpose: identifying where they actually improve outcomes, reduce friction, or unlock new possibilities.

For most organisations, the opportunity lies in practical adoption:

This technology is powerful, but it’s not automatic. It requires clear goals, thoughtful oversight, and ongoing evaluation. The right setup can save time, cut costs, and improve consistency. The wrong one can introduce risk, bias, or noise.

As generative AI continues to evolve, the key question isn’t “Should we use it?” but rather “Where does it make sense, and how do we stay in control?”

That’s where the value lies: in using AI to support real work, not replace it. Keep humans in charge. Use the tools to go faster and think better. And focus on outcomes that actually move your business forward.
