What is a Vector Database & How Does It Work?

If you’ve ever wondered how YouTube suggests the exact video or song you want to watch or listen to next, or how a chatbot instantly finds the right answer, there’s a good chance a vector database is involved.

You’ve probably heard terms like “embeddings,” “semantic search,” or “vector stores” in recent years. They can sound technical, but the basic idea is simple. And if you’re working with AI in any form, this is something worth understanding.

In this blog, we’ll explain what a vector database is, how it works, and why it matters for real-world use cases.

A vector database is built to store and search data using vectors—numerical representations of things like text, images, or audio.

Think of a vector as a way to capture the meaning of data in numbers. Instead of matching exact words, a vector database finds results based on context and similarity.

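Here’s the difference: a traditional keyword search looks for the exact words in a query, while a vector database looks for data with a similar meaning.

The key idea: vector databases don’t just match words; they match concepts.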

To understand how a vector database works, you first need to understand vectors and embeddings.

Everything starts with embeddings—numerical vectors generated using embedding models trained to capture semantic meaning.

These models take raw inputs like text or images and turn them into vectors (lists of numbers) that represent the core idea behind the data.

For example:

| Original Data | Vector Representation |
|---|---|
| “Affordable Laptop” | [0.23, 0.91, 0.34, …] |
| “Budget-friendly Computer” | [0.24, 0.89, 0.33, …] |
| “Expensive Sports Car” | [-0.77, -0.21, 0.12, …] |

The first two vectors are nearly identical because their meanings are similar.

The third one is completely different—it represents a different concept.
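“Nearly identical” is usually measured with cosine similarity: the cosine of the angle between two vectors. The table values are cut off, so this minimal sketch treats only the three visible numbers of each row as toy 3-dimensional vectors; real embeddings have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: close to 1.0 means same direction,
    around 0 means unrelated, negative means pointing in opposite directions."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy vectors: only the first three values shown in the table (illustrative only).
affordable_laptop = [0.23, 0.91, 0.34]
budget_computer = [0.24, 0.89, 0.33]
sports_car = [-0.77, -0.21, 0.12]

print(cosine_similarity(affordable_laptop, budget_computer))
print(cosine_similarity(affordable_laptop, sports_car))
```

The laptop and computer vectors score very close to 1, while the sports-car vector scores below zero against the laptop, which matches the intuition in the table.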

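Once the data is converted into embeddings, the vector database stores and indexes them for fast retrieval. It uses specialised indexing methods such as HNSW (Hierarchical Navigable Small World), IVF (Inverted File), and PQ (Product Quantization).

These methods make it possible to search millions of vectors quickly, with only a small loss in accuracy compared with checking every single vector.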
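To see how an index avoids checking every vector, here is a minimal sketch of the idea behind IVF (Inverted File), one of the indexing methods this article mentions. It assumes the cluster centroids are already known; real IVF implementations learn them with k-means. The class `ToyIVFIndex` and its methods are hypothetical names for illustration, not any library’s API.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

class ToyIVFIndex:
    """Simplified inverted-file (IVF) index: each vector is filed under its nearest
    centroid, and a search scans only the n_probe closest groups."""

    def __init__(self, centroids):
        # Assumption: centroids are given up front (real indexes train them with k-means).
        self.centroids = centroids
        self.lists = {i: [] for i in range(len(centroids))}

    def _nearest_centroids(self, vector, n):
        order = sorted(range(len(self.centroids)),
                       key=lambda i: squared_distance(vector, self.centroids[i]))
        return order[:n]

    def add(self, item_id, vector):
        # Store the vector in the list belonging to its single closest centroid.
        cluster = self._nearest_centroids(vector, 1)[0]
        self.lists[cluster].append((item_id, vector))

    def search(self, query, k=3, n_probe=1):
        # Gather candidates from only the n_probe nearest clusters, then rank them exactly.
        candidates = []
        for cluster in self._nearest_centroids(query, n_probe):
            candidates.extend(self.lists[cluster])
        candidates.sort(key=lambda item: squared_distance(query, item[1]))
        return [item_id for item_id, _ in candidates[:k]]

index = ToyIVFIndex(centroids=[[0.0, 0.0], [10.0, 10.0]])
index.add("a", [0.5, 0.2])
index.add("b", [1.0, 1.1])
index.add("c", [9.5, 10.2])
index.add("d", [10.4, 9.9])
print(index.search([9.8, 10.0], k=2))
```

With `n_probe=1` the query never touches items “a” and “b”, which is where the speed comes from; raising `n_probe` scans more clusters, improving accuracy at the cost of speed.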

When a user sends a query, it’s also converted into an embedding. The database then compares this query vector to the stored ones and returns results based on similarity—not keyword match.
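The query step can be sketched as an exhaustive (“flat”) search: score the query vector against every stored vector and keep the top k. This is a minimal sketch; the document IDs and the hand-made 3-d vectors are hypothetical, and in a real system both the stored vectors and the query vector would come from the same embedding model.

```python
import heapq
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def search(query_vector, stored, k=2):
    """Flat search: compare the query with every stored vector and
    return the k most similar (doc_id, score) pairs, best first."""
    scored = ((cosine_similarity(query_vector, vec), doc_id) for doc_id, vec in stored.items())
    return [(doc_id, score) for score, doc_id in heapq.nlargest(k, scored)]

# Hypothetical stored embeddings (hand-made for illustration).
stored = {
    "forgot-password-article": [0.9, 0.1, 0.0],
    "change-email-article": [0.6, 0.5, 0.1],
    "shipping-times-article": [0.0, 0.2, 0.95],
}
# Assumed embedding of a query such as "How to reset my password?"
query = [0.85, 0.15, 0.05]
print(search(query, stored, k=2))
```

Flat search is exact but checks every item, which is why large collections rely on the approximate indexes described in this article.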

For example, if someone searches “How to reset my password?”, the system might return help articles that describe the same task in different words.

Even though the words are different, the intent is the same. This is called semantic search: retrieving information based on meaning rather than exact phrasing.

Embedding models convert raw data—text, images, or other formats—into vectors that capture semantic meaning. These vectors are called embeddings, and they’re what vector databases store and search.

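Commonly used embedding models include those from OpenAI and Cohere, as well as open-source options such as BERT and SentenceTransformers models.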

Semantic search returns results based on meaning, not just keywords. Instead of looking for exact phrases, it understands the intent behind a query and retrieves the most relevant results—even if the wording is different.

For example:

Query: “How do I recover my login?”

Result: “Forgot password” → matched because the meaning is aligned.

This leads to more accurate, helpful search experiences—especially in AI-driven applications like chatbots, help centres, and recommendation systems.

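Approximate nearest neighbour (ANN) algorithms are what make vector search fast and scalable. They find the embeddings most similar to a query among millions of records without scanning every item, accepting a small chance of missing the exact best match in exchange for speed.

Popular ANN approaches include HNSW (graph-based), IVF (cluster-based), and PQ (compression-based, often combined with IVF).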
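One ANN technique simple enough to sketch in a few lines is random-hyperplane locality-sensitive hashing (LSH). It is a different method from HNSW or IVF, but it shows the core ANN trick: put likely neighbours in the same bucket so a query only compares against a short candidate list. The function names below are hypothetical, and the vectors are toy values.

```python
import random

def make_hyperplanes(dim, n_planes, seed=0):
    """Random hyperplanes through the origin, each given by its normal vector."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_planes)]

def lsh_signature(vector, hyperplanes):
    """One bit per hyperplane: 1 if the vector lies on the positive side, else 0.
    Vectors separated by a small angle tend to get the same bits."""
    return tuple(int(sum(v * h for v, h in zip(vector, plane)) >= 0) for plane in hyperplanes)

def build_buckets(vectors, hyperplanes):
    """Group item ids by signature; a query is only compared with its own bucket."""
    buckets = {}
    for item_id, vec in vectors.items():
        buckets.setdefault(lsh_signature(vec, hyperplanes), []).append(item_id)
    return buckets

# Toy 3-d vectors (illustrative values only).
vectors = {
    "laptop": [0.23, 0.91, 0.34],
    "computer": [0.24, 0.89, 0.33],
    "sports car": [-0.77, -0.21, 0.12],
}
planes = make_hyperplanes(dim=3, n_planes=4, seed=42)
buckets = build_buckets(vectors, planes)
query = [0.25, 0.90, 0.35]
# Candidates are only the items sharing the query's bucket; exact scoring
# (for example, cosine similarity) would then run on this short list.
print(buckets.get(lsh_signature(query, planes), []))
```

With a handful of random hyperplanes, near-duplicates like “laptop” and “computer” usually share a bucket and the sports-car vector usually does not, but a close neighbour can occasionally be split off. Real LSH indexes use several hash tables to reduce such misses.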

Most businesses today deal with unstructured data—text, images, videos, customer queries. But traditional databases aren’t built for that.

That’s where vector databases come in. Here’s how they actually help your business:

People often make typos or don’t know the exact keywords.

Because a vector database compares meaning rather than exact strings, a search with a typo or a related term can often still return the right results. How forgiving it is depends on the embedding model.

Example: Typing “goldeen retrever” can still return “golden retriever” results.
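To illustrate why a vector comparison tolerates typos, this sketch uses character trigram counts as a stand-in for a learned embedding. It is a swapped-in, much simpler technique than a neural embedding model, chosen only because it runs without any model; the catalog entries are hypothetical.

```python
import math
from collections import Counter

def char_trigrams(text):
    """Count overlapping 3-character chunks, padding with spaces so word edges count."""
    padded = f"  {text.lower()} "
    return Counter(padded[i:i + 3] for i in range(len(padded) - 2))

def cosine(a: Counter, b: Counter) -> float:
    # Missing keys in a Counter read as 0, so only shared trigrams add to the dot product.
    dot = sum(count * b[gram] for gram, count in a.items())
    norm = math.sqrt(sum(c * c for c in a.values())) * math.sqrt(sum(c * c for c in b.values()))
    return dot / norm if norm else 0.0

catalog = ["golden retriever", "german shepherd", "labrador retriever"]
query = char_trigrams("goldeen retrever")
ranked = sorted(catalog, key=lambda name: cosine(query, char_trigrams(name)), reverse=True)
print(ranked)
```

The misspelled query still shares most of its trigrams with “golden retriever”, so that entry ranks first even though no word matches exactly.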

AI systems often make things up (hallucinate) when they don’t have real data to back their answers.

Vector databases help by letting the AI search through your actual documents, knowledge base, or product data first, so its answers are grounded in facts rather than guesses.

Many basic recommendation engines rely on simple filters such as category or popularity. With a vector database, items and user behaviour (what someone has viewed, liked, or bought) can be represented as vectors, so the system can find similar items even when they come from a different category.

Example: A user who watches sports documentaries may get recommendations for motivational content or behind-the-scenes interviews—things others with similar interests liked.
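One simple way to turn viewing history into recommendations is to average the vectors of items a user engaged with into a “taste” vector, then rank unseen items by similarity to it. This is a minimal sketch of that one approach, not how any particular product does it; the item names and the 3-d vectors (roughly “sport, inspiration, comedy”) are hypothetical.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def recommend(history_ids, item_vectors, k=2):
    """Average the user's watched-item vectors into a profile vector,
    then return the k unseen items most similar to that profile."""
    dims = len(next(iter(item_vectors.values())))
    profile = [sum(item_vectors[i][d] for i in history_ids) / len(history_ids) for d in range(dims)]
    unseen = [i for i in item_vectors if i not in history_ids]
    return sorted(unseen, key=lambda i: cosine(profile, item_vectors[i]), reverse=True)[:k]

# Hypothetical item embeddings: roughly [sport, inspiration, comedy].
items = {
    "marathon-doc": [0.9, 0.6, 0.0],
    "football-doc": [0.95, 0.4, 0.1],
    "athlete-interview": [0.6, 0.8, 0.1],
    "motivational-talk": [0.2, 0.95, 0.05],
    "sitcom-episode": [0.0, 0.1, 0.98],
}
print(recommend(["marathon-doc", "football-doc"], items))
```

A user who watched two sports documentaries gets the athlete interview and the motivational talk, while the sitcom ranks last.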

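Users now search with photos, voice, and text. As long as each type of input is converted into embeddings that can be compared with one another (for example, by a multimodal embedding model), a single vector database can support all of them.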

Example: A user can describe a product (“red sofa with wooden legs”) and get matching images—even if the product titles don’t exactly match the words.

| Industry | Use Case | Real-World Impact |
|---|---|---|
| 🛒 E-commerce | Personalised product ranking based on vector similarity with past behaviour | Users see what they’re likely to buy: fewer clicks, more revenue |
| 💬 Customer Support | RAG-based chatbot retrieves answers from large knowledge bases | A well-built system can cut human escalations by up to 80% |
| 🎬 Media & Streaming | Suggest similar songs, videos, or news articles using vector matching | Boosts engagement and time-on-app without manual tagging |
| 💼 HR & IT | Semantic document search across resumes, policies, and tickets | Reduces time to resolution and improves internal knowledge access |
| 🏦 Finance | Compare live transactions against known fraud patterns via vector search | Flags risks in real time, reducing chargebacks and losses |
| ⚖️ LegalTech | Semantic case law search based on vectorised legal text | Finds similar precedents quickly, without keyword guesswork |
| 🎓 EdTech | Match students with relevant learning content using embedding search | Improves comprehension and retention via personalised material |
| 🚛 Logistics | Detect similar routes or shipment exceptions using time-series vectors | Reduces delays and improves the accuracy of delivery ETA predictions |
| 🎮 Gaming | Recommend levels or challenges similar to a player’s past behaviour | Keeps players engaged longer by offering relevant content |
| 🛡️ Security | Detect insider threats by comparing user behaviour vectors | Identifies abnormal activity early, before damage is done |

A vector database stores data as embeddings (vectors) instead of traditional rows and columns. It helps find similar data by using nearest-neighbour search instead of exact keyword matching — ideal for AI apps, search engines, and recommendation systems.

Embeddings are numerical representations of text, images, or audio. They help capture meaning or similarity. For example, in chatbots or search, embeddings let you match questions with relevant answers even if the words aren’t exactly the same.

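Most vector DBs work with embeddings from popular models and providers such as OpenAI, Cohere, BERT, and SentenceTransformers. You can also use a custom embedding model, depending on your use case and the DB’s API support; just keep every vector in a collection from the same model so their dimensions and meanings match.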

In Retrieval-Augmented Generation (RAG), vector DBs store knowledge as embeddings. When a user asks a question, relevant data is fetched using vector search and passed to an LLM such as GPT-4 as context, so its response is grounded in that data.
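The hand-off from vector search to the LLM can be sketched as prompt assembly: take the highest-scoring retrieved chunks and place them in the prompt as context. This is a minimal sketch under stated assumptions: the retrieved `(score, text)` pairs are hypothetical sample data, `build_rag_prompt` is a made-up helper, and the actual LLM call is left out because it depends on your provider.

```python
def build_rag_prompt(question, retrieved_chunks, max_chunks=3):
    """Combine the best retrieved chunks with the user's question.
    retrieved_chunks: list of (similarity_score, text) pairs from a vector search."""
    top = sorted(retrieved_chunks, key=lambda c: c[0], reverse=True)[:max_chunks]
    context = "\n".join(f"- {text}" for _, text in top)
    return (
        "Answer the question using only the context below. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )

# Hypothetical search results for the question below.
chunks = [
    (0.91, "To reset your password, click 'Forgot password' on the login page."),
    (0.42, "Shipping usually takes 3-5 business days."),
    (0.87, "Password reset links expire after 24 hours."),
]
prompt = build_rag_prompt("How do I reset my password?", chunks, max_chunks=2)
print(prompt)
# The prompt would then be sent to an LLM (provider-specific call not shown).
```

Limiting the context to the top few chunks keeps the prompt short and leaves out weakly related results, such as the shipping note here.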

Modern vector DBs like Pinecone, Weaviate, and Milvus are built to handle millions of embeddings with fast search and horizontal scaling. They support indexing strategies like HNSW and IVF for speed.

Common indexing methods include HNSW (Hierarchical Navigable Small World), IVF (Inverted File), PQ (Product Quantization), and Flat (brute-force, exact search). Each involves a trade-off between speed, accuracy, and memory usage.
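The accuracy side of that trade-off is commonly measured with recall@k: how many of the true top-k neighbours (found by a Flat, brute-force search) an approximate index also returns. A minimal sketch with hypothetical result lists:

```python
def recall_at_k(exact_ids, approx_ids, k):
    """Fraction of the true top-k neighbours (from a flat, exact search)
    that the approximate index also returned in its own top k.
    Assumes exact_ids contains at least k results."""
    true_top = set(exact_ids[:k])
    return len(true_top & set(approx_ids[:k])) / k

# Hypothetical results for one query.
flat_results = ["doc7", "doc2", "doc9", "doc4", "doc1"]
ann_results = ["doc7", "doc9", "doc3", "doc2", "doc8"]
print(recall_at_k(flat_results, ann_results, k=5))
```

Here the approximate index found 3 of the 5 true neighbours. In practice you would average recall over many queries while tuning index parameters, and compare it with query latency and memory use.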

You can also self-host. Open-source options like FAISS (a library rather than a full database server), Milvus, Qdrant, and Weaviate let you run vector search on your own servers or cloud, giving you full control over infrastructure and data.

Most vector DBs also let you attach metadata (such as tags, categories, or timestamps) to each vector, and then filter results on that metadata during search.
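Metadata filtering can be sketched as pre-filtering: drop every record whose metadata does not match, then rank only the survivors by similarity. This is a simplified illustration, not any specific database’s filter syntax; the record layout, field names, and `filtered_search` helper are hypothetical.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def filtered_search(query, records, k=2, **filters):
    """Keep records whose metadata matches every filter exactly,
    then return the ids of the k most similar ones."""
    matches = [r for r in records
               if all(r["metadata"].get(key) == value for key, value in filters.items())]
    matches.sort(key=lambda r: cosine(query, r["vector"]), reverse=True)
    return [r["id"] for r in matches[:k]]

# Hypothetical knowledge-base records with 2-d toy vectors.
records = [
    {"id": "kb-1", "vector": [0.9, 0.1], "metadata": {"lang": "en", "category": "billing"}},
    {"id": "kb-2", "vector": [0.8, 0.3], "metadata": {"lang": "de", "category": "billing"}},
    {"id": "kb-3", "vector": [0.7, 0.2], "metadata": {"lang": "en", "category": "billing"}},
    {"id": "kb-4", "vector": [0.95, 0.05], "metadata": {"lang": "en", "category": "shipping"}},
]
print(filtered_search([1.0, 0.1], records, k=2, lang="en", category="billing"))
```

Without filters, “kb-4” would rank second; the filter excludes it because its category is “shipping”.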

Start by considering your needs: managed or self-hosted, scale, integration with your LLM stack, metadata filtering, indexing options, and pricing. Pinecone (SaaS), Weaviate (hybrid), and FAISS (local) are good starting points.

A vector database is built for one thing: finding results based on meaning, not just matching keywords. That’s a major advantage when you’re working with unstructured data—text, images, audio—where traditional search often falls short.

It works by storing vector representations of data generated by embedding models. When a query comes in, it’s converted into a vector too, and the system finds the closest matches based on similarity—not exact phrasing. This allows AI systems to return more relevant results, even when the user’s input is imprecise or phrased differently.

In practical terms, this means fewer irrelevant search results, better response accuracy in chatbots, and more personalised recommendations. It doesn’t replace keyword search entirely, but it fills the gaps where traditional methods can’t deliver.

As AI tools become more context-aware, vector databases are becoming essential—not because they’re new, but because they work better for the way people actually search and interact today.

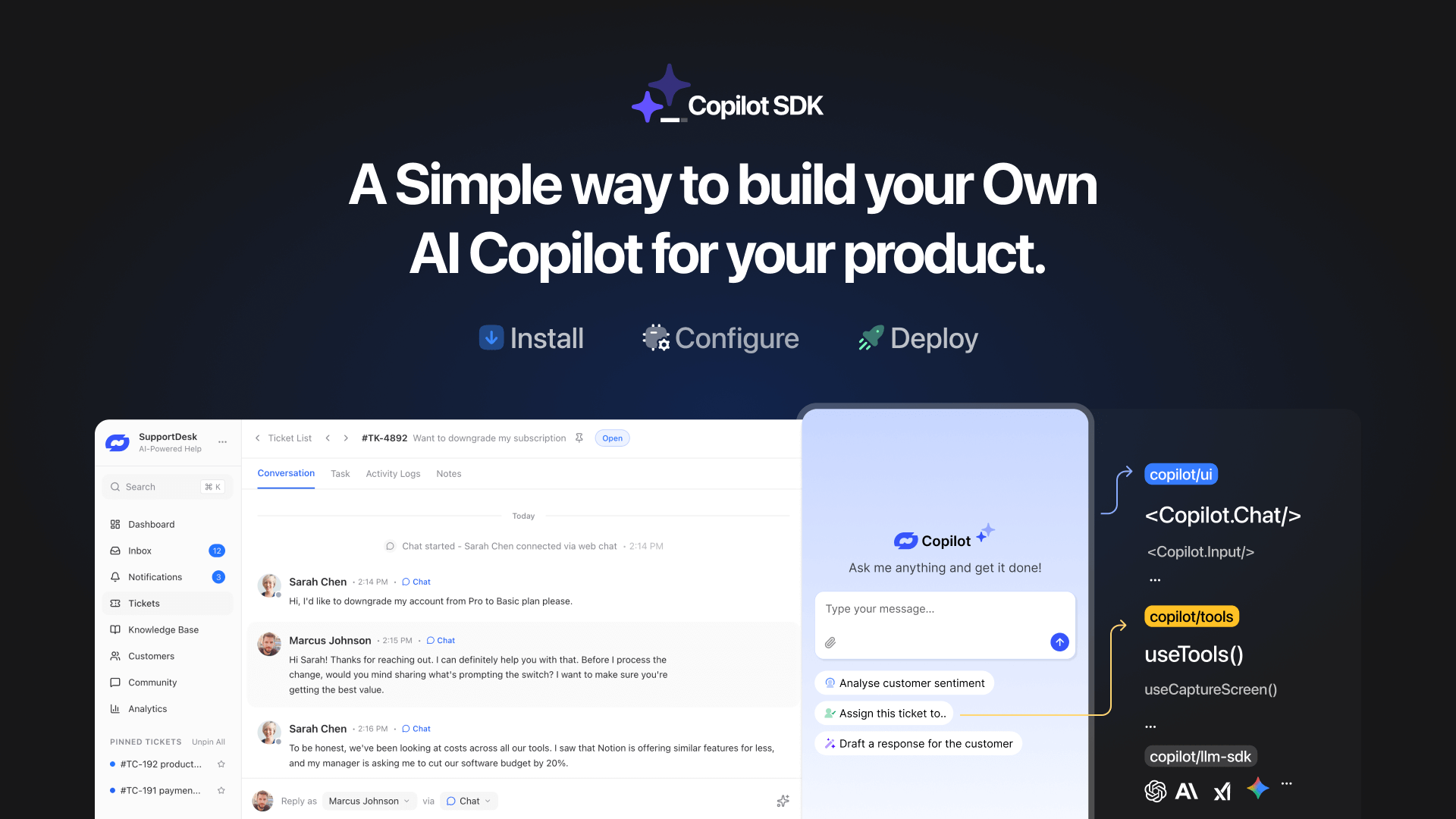

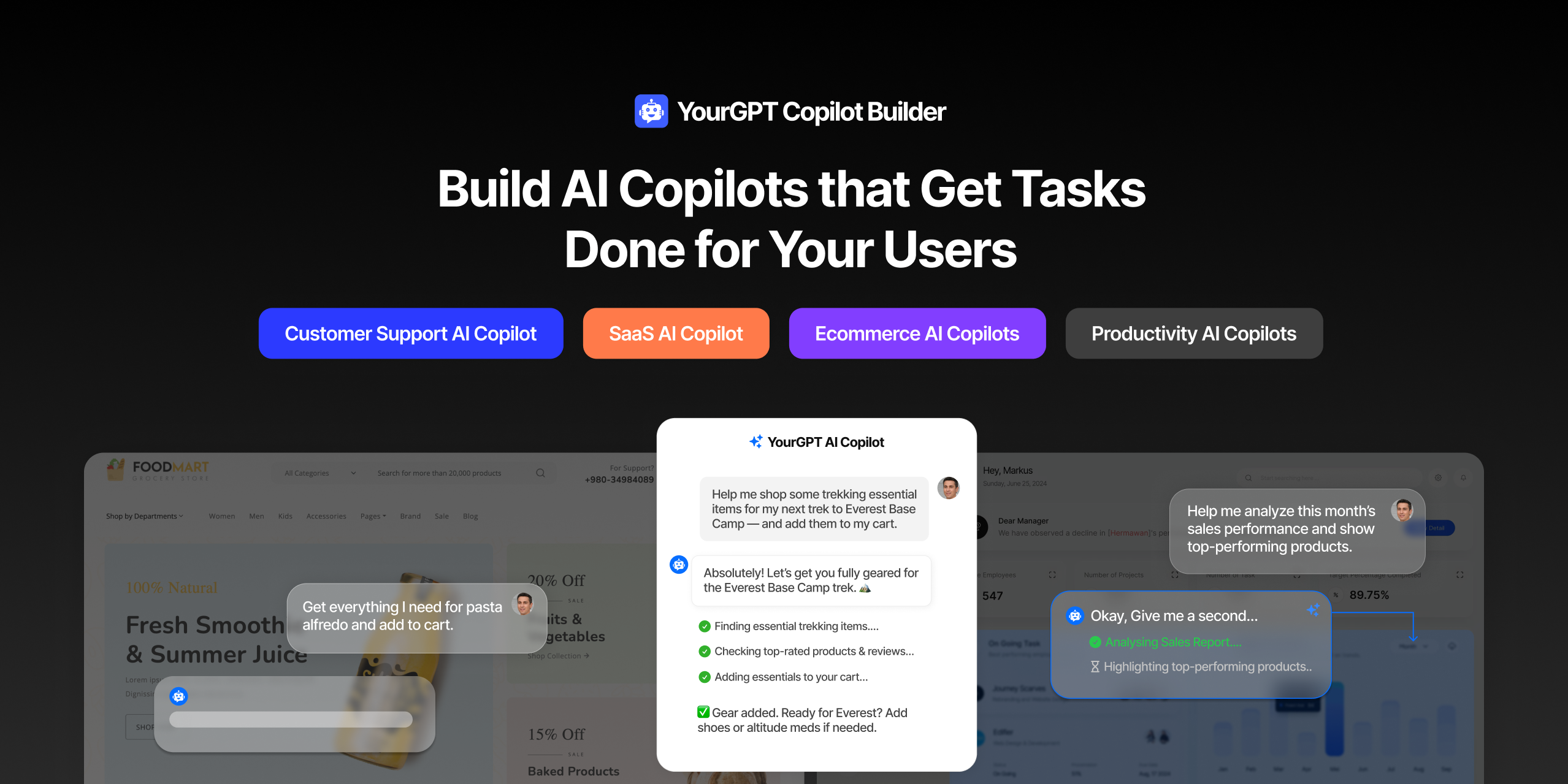
