Fine-tuning GPT-3.5 Turbo: A Deep Dive into the World of Fine-Tuning

The world of AI is ever-evolving, and OpenAI is at the forefront of this revolution. With the recent announcement of fine-tuning availability for GPT-3.5 Turbo and the upcoming support for GPT-4, developers are now empowered to tailor models to their specific needs. This article delves into the significance, use cases, and steps to leverage this fine-tuning capability.

Fine-tuning isn’t just a minor update; it’s a game-changer. Early tests have shown that a fine-tuned GPT-3.5 Turbo can match, and sometimes even outperform, base GPT-4 on certain narrow, specialized tasks. The beauty of this is that while developers harness the power of customization, they retain full ownership of the data they send through the fine-tuning API, which supports privacy and security.

Why Fine-Tune GPT-3.5 Turbo?

Since the launch of GPT-3.5 Turbo, there’s been a growing demand for customization. Here’s why:

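Moreover, fine-tuning lets businesses build instructions into the model itself rather than repeating them in every request. Early testers have cut prompt size by up to 90% this way, leading to faster API calls and reduced costs.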

The Power of Combination

Fine-tuning is most powerful when combined with techniques like prompt engineering, information retrieval, and function calling. Fine-tuning support for function calling and for gpt-3.5-turbo-16k is also on the horizon, which will widen the range of use cases further.

Getting Started with Fine-Tuning

Step 1: Prepare your data. For instance:

{ "messages": [ { "role": "system", "content": "You are an assistant that occasionally misspells words" }, { "role": "user", "content": "Tell me a story." }, { "role": "assistant", "content": "One day a student went to schoool." } ] }
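Before uploading, it can help to check that every training example has the shape shown above. The following is a minimal sketch, assuming the training file is JSONL (one JSON object per line) and that each object carries a "messages" list of role/content pairs as in the example. The helper names `validate_example` and `to_jsonl` are hypothetical and not part of any OpenAI library.

```python
import json

# Roles that appear in the example above; treated here as the allowed set (an assumption).
ALLOWED_ROLES = {"system", "user", "assistant"}

# The training example from this article, as a Python dict.
EXAMPLE = {
    "messages": [
        {"role": "system", "content": "You are an assistant that occasionally misspells words"},
        {"role": "user", "content": "Tell me a story."},
        {"role": "assistant", "content": "One day a student went to schoool."},
    ]
}


def validate_example(line: str) -> list[str]:
    """Return a list of problems found in one JSONL line (empty if none)."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc}"]

    messages = record.get("messages") if isinstance(record, dict) else None
    if not isinstance(messages, list) or not messages:
        return ['missing a non-empty "messages" list']

    problems = []
    for i, message in enumerate(messages):
        if not isinstance(message, dict):
            problems.append(f"message {i}: must be an object")
            continue
        if message.get("role") not in ALLOWED_ROLES:
            problems.append(f"message {i}: unexpected role {message.get('role')!r}")
        if not isinstance(message.get("content"), str):
            problems.append(f"message {i}: content must be a string")

    # Without an assistant turn there is nothing for the model to imitate.
    if not any(isinstance(m, dict) and m.get("role") == "assistant" for m in messages):
        problems.append("no assistant message to learn from")
    return problems


def to_jsonl(examples: list[dict]) -> str:
    """Serialize examples as JSONL: one compact JSON object per line."""
    return "\n".join(json.dumps(e, ensure_ascii=False) for e in examples) + "\n"


if __name__ == "__main__":
    for line_number, line in enumerate(to_jsonl([EXAMPLE]).splitlines(), start=1):
        print(line_number, validate_example(line) or "ok")
```

Running the checks locally is cheap compared with discovering a malformed line after a job has been submitted.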

Step 2: Upload your training file with a curl request to the OpenAI files endpoint, setting the purpose to fine-tune.
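The curl command itself is not reproduced in this article, so here is a rough Python equivalent of the upload step. It is a sketch under stated assumptions: the endpoint `https://api.openai.com/v1/files`, the `purpose` value `fine-tune`, and bearer-token authentication reflect the public OpenAI API as commonly documented, while the function name `build_upload_request` and the file name are hypothetical. The function only builds the request; nothing is sent until you pass it to `urllib.request.urlopen`.

```python
import urllib.request
import uuid

# Assumed endpoint for file uploads in the OpenAI API.
FILES_URL = "https://api.openai.com/v1/files"


def build_upload_request(jsonl_bytes: bytes, filename: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a multipart/form-data upload of a JSONL training file."""
    boundary = uuid.uuid4().hex
    body = b"".join([
        # First form field: purpose=fine-tune
        f"--{boundary}\r\n".encode(),
        b'Content-Disposition: form-data; name="purpose"\r\n\r\n',
        b"fine-tune\r\n",
        # Second form field: the training file itself
        f"--{boundary}\r\n".encode(),
        f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'.encode(),
        b"Content-Type: application/octet-stream\r\n\r\n",
        jsonl_bytes,
        # Closing boundary
        f"\r\n--{boundary}--\r\n".encode(),
    ])
    return urllib.request.Request(
        FILES_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
    )


if __name__ == "__main__":
    # Placeholder key and file contents; replace both before sending anything.
    request = build_upload_request(b'{"messages": []}\n', "training.jsonl", "YOUR_API_KEY")
    print(request.get_method(), request.full_url, len(request.data), "bytes")
```

Keeping request construction separate from sending makes the step easy to inspect and test without a network call or a real API key.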

Step 3: Initiate a fine-tuning job with a second curl request that names the uploaded training file and the base model.
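As with the upload, the job-creation command is not shown here, so the following Python sketch illustrates what such a request might look like. It assumes the endpoint `https://api.openai.com/v1/fine_tuning/jobs` and a JSON body with `training_file` and `model` fields. The function name `build_job_request` and the file ID in the usage example are hypothetical placeholders. The request is built but not sent.

```python
import json
import urllib.request

# Assumed endpoint for creating fine-tuning jobs in the OpenAI API.
JOBS_URL = "https://api.openai.com/v1/fine_tuning/jobs"


def build_job_request(
    training_file_id: str,
    api_key: str,
    model: str = "gpt-3.5-turbo",
) -> urllib.request.Request:
    """Build (but do not send) a request that starts a fine-tuning job."""
    payload = {
        "training_file": training_file_id,  # ID returned by the upload step
        "model": model,                     # base model to fine-tune
    }
    return urllib.request.Request(
        JOBS_URL,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    # "file-abc123" is a made-up ID; use the one returned when you uploaded your file.
    request = build_job_request("file-abc123", "YOUR_API_KEY")
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

To actually submit the job, the request would be passed to `urllib.request.urlopen`, and the JSON response parsed to find the job's identifier and status.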

Understanding the Costs

Fine-tuning is an investment, and it’s essential to understand its cost structure:

To give a practical example, a gpt-3.5-turbo fine-tuning job with a 100,000-token training file trained over 3 epochs would cost approximately $2.40.
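The arithmetic behind that figure can be sketched in a few lines of Python. The per-token rate is not stated in the text above; the default of $0.008 per 1,000 training tokens used here is inferred from the example itself ($2.40 divided by 300,000 trained tokens) and should be checked against current pricing. The function name `training_cost_usd` is hypothetical.

```python
def training_cost_usd(
    training_tokens: int,
    epochs: int,
    usd_per_1k_tokens: float = 0.008,  # inferred from the $2.40 example, not an official figure
) -> float:
    """Estimate training cost: tokens in the file x epochs x price per 1K tokens."""
    trained_tokens = training_tokens * epochs
    return round(trained_tokens / 1000 * usd_per_1k_tokens, 2)


if __name__ == "__main__":
    # The example from the text: a 100,000-token file trained for 3 epochs.
    cost = training_cost_usd(100_000, 3)
    print(f"Estimated training cost: ${cost:.2f}")
```

Note that this covers training only. Usage of the resulting fine-tuned model is billed separately, so the total cost of a project also depends on how much input and output traffic the model serves.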

Conclusion

The introduction of fine-tuning for GPT-3.5 Turbo marks a significant milestone in the journey of AI customization. Developers and businesses can now harness the power of AI, molding it to fit their unique needs and use cases. The future of AI is not just about intelligence; it’s about adaptability and customization. And with OpenAI’s latest update, that future is now.

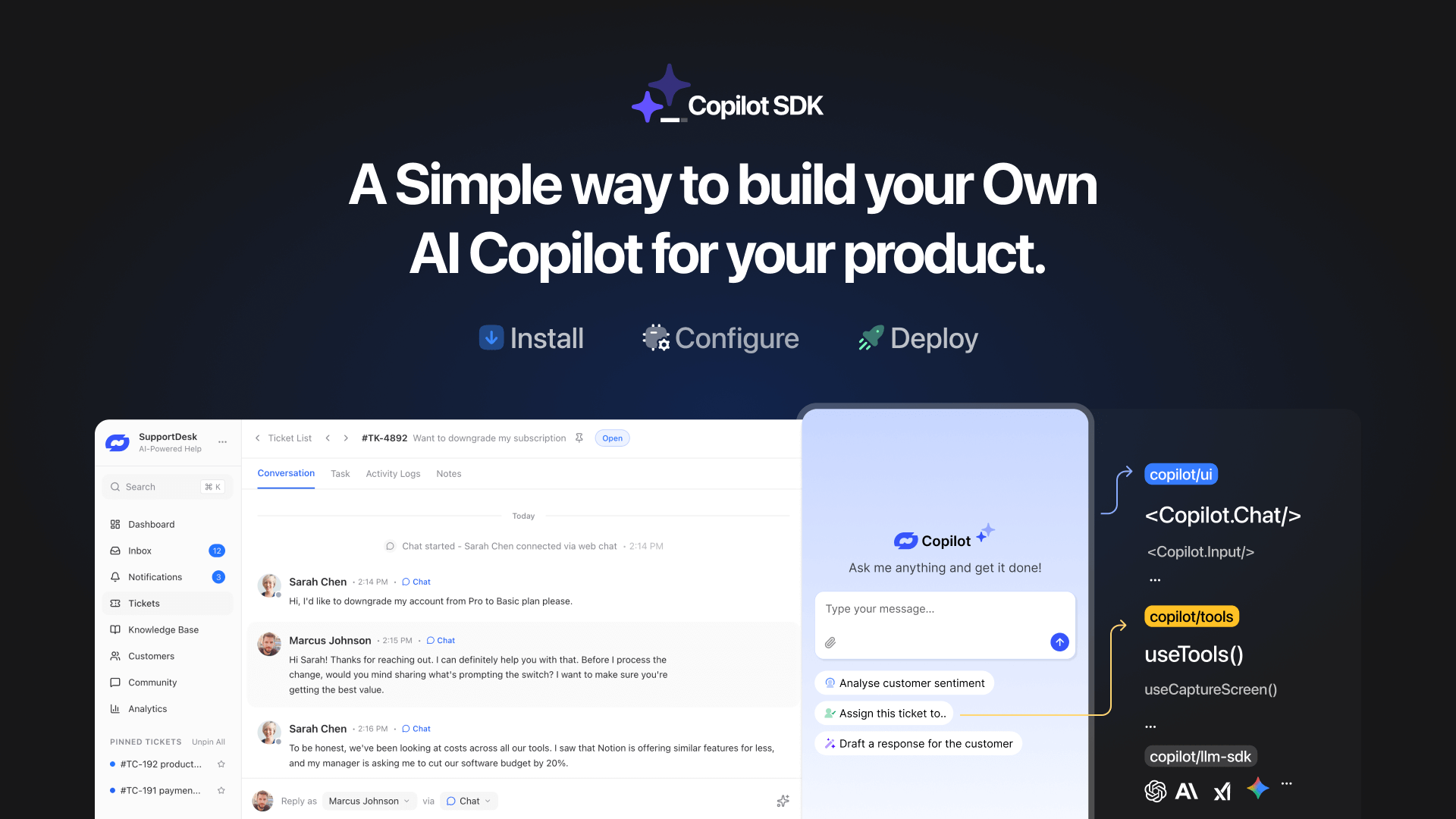

TL;DR YourGPT Copilot SDK is an open-source SDK for building AI agents that understand application state and can take real actions inside your product. Instead of isolated chat widgets, these agents are connected to your product, understand what users are doing, and have full context. This allows teams to build AI that executes tasks directly […]

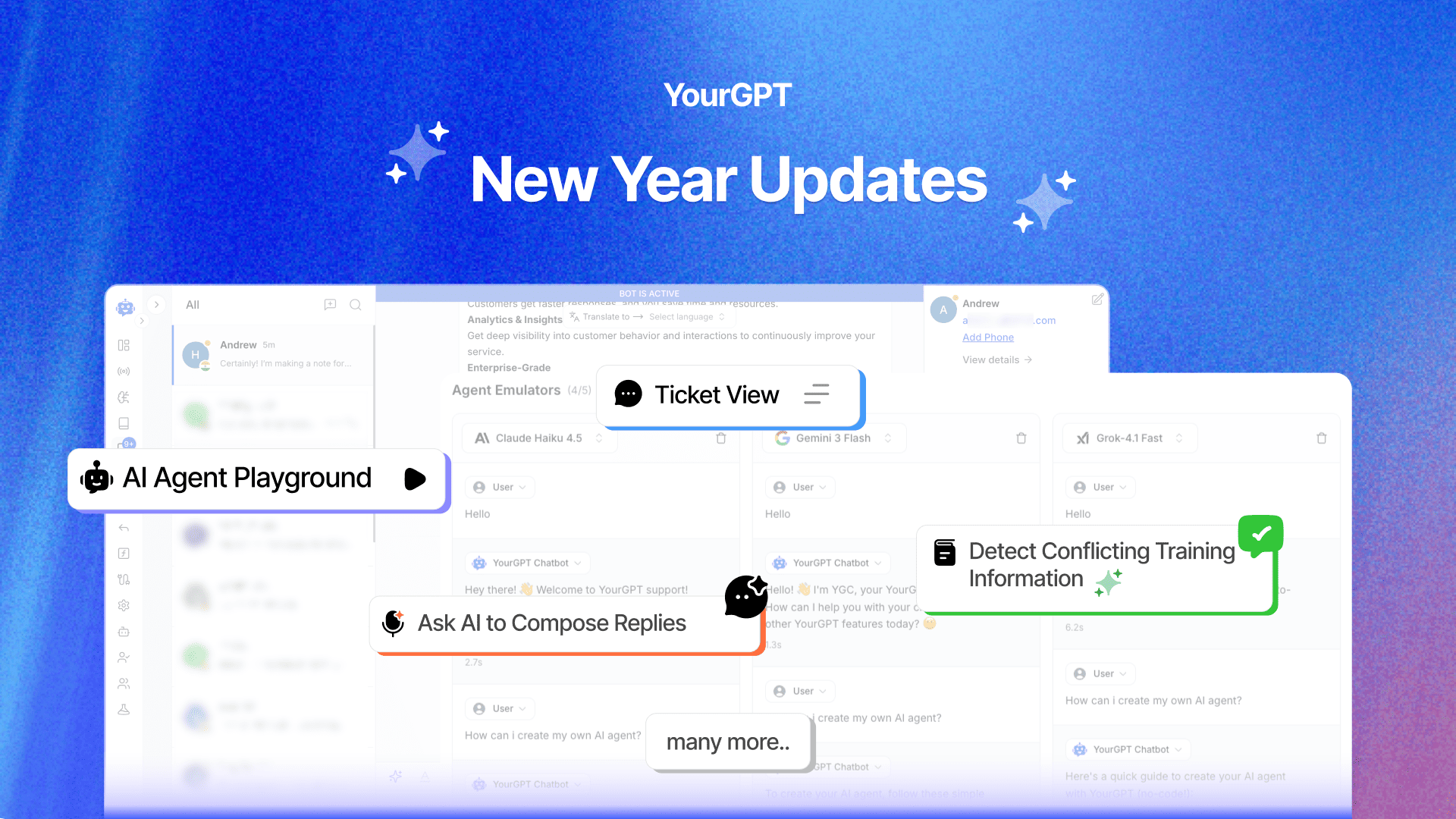

Happy New Year! We hope 2026 brings you closer to everything you’re working toward. Throughout 2025, you’ve seen the platform evolve. We shipped the AI Copilot Builder so your AI could execute actions on both frontend and backend, not just answer questions. We added AI assistance inside Studio to help you generate workflows without starting […]

Grok 4 is xAI’s most advanced large language model, representing a step change from Grok 3. With a 130K+ context window, built-in coding support, and multimodal capabilities, Grok 4 is designed for users who demand both reasoning and performance. If you’re wondering what Grok 4 offers, how it differs from previous versions, and how you […]

OpenAI officially launched GPT-5 on August 7, 2025 during a livestream event, marking one of the most significant AI releases since GPT-4. This unified system combines advanced reasoning capabilities with multimodal processing and introduces a companion family of open-weight models called GPT-OSS. If you are evaluating GPT-5 for your business, comparing it to GPT-4.1, or […]

In 2025, artificial intelligence is a core driver of business growth. Leading companies are using AI to power customer support, automate content, improving operations, and much more. But success with AI doesn’t come from picking the most popular model. It comes from selecting the option that best aligns your business goals and needs. Today, the […]

You’ve seen it on X, heard it on podcasts, maybe even scrolled past a LinkedIn post calling it the future—“Vibe Marketing.” Yes, the term is everywhere. But beneath the noise, there’s a real shift happening. Vibe Marketing is how today’s AI-native teams run fast, test more, and get results without relying on bloated processes or […]