OpenAI Announcement: GPT Vision, a Multimodal Innovation

OpenAI is making waves in artificial intelligence once more, this time by taking a significant step towards democratising visual AI technology. With the public release of GPT Vision, ChatGPT Plus users can now communicate using images. The capability itself is not entirely new: OpenAI first introduced it six months ago, on March 15, 2023. What has changed is that it is now rolling out publicly. In this post we go over the GPT Vision update and look at how it is changing the way we interact with AI and visuals.

Multimodal AI refers to artificial intelligence systems that can understand, interpret, and process information from a variety of data inputs, or “modalities.” Text, images, audio, video, and other sensory data are examples of modalities. The key aspect of multimodal AI is its ability to integrate and synthesise information from these diverse sources, much as humans perceive and understand the world using multiple senses.

GPT Vision is not your typical visual AI technology. Unlike traditional vision models that focus only on images, GPT Vision is multimodal: it can take text and images together as input and reason about both in a single conversation, responding in text. This makes for a more natural and interactive user experience.
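For readers who want to see what "text and images together" can look like in practice, here is a minimal sketch that is not taken from the announcement. It assumes the message layout documented for OpenAI's Chat Completions API, where a single user message holds a list of text and `image_url` parts. The helper name `build_image_question` and the placeholder image bytes are hypothetical, and the sketch only builds the message; it does not call any API.

```python
import base64
import json


def build_image_question(image_bytes: bytes, question: str,
                         mime_type: str = "image/png") -> dict:
    """Build one user message that carries both a text question and an image.

    Hypothetical helper: the layout follows the Chat Completions
    image-input format, but nothing is sent anywhere.
    """
    # Encode the raw image bytes as a base64 data URL so the image travels inline.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": data_url}},
        ],
    }


# Placeholder bytes stand in for a real photo read from disk.
message = build_image_question(b"\x89PNG placeholder", "Which landmark is this?")
print(json.dumps(message, indent=2))
```

The point of the sketch is that the question and the picture sit side by side in one message, which is what lets the model answer about the image in context rather than treating it as a separate upload.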

Alongside image input, OpenAI is adding voice capabilities to ChatGPT, making interactions more natural and engaging. Users can now hold voice conversations and share images, whether they are discussing landmarks, planning meals, or getting homework help using images.

OpenAI is taking a measured approach to deploying these features, starting with voice on iOS and Android for Plus and Enterprise users. The gradual rollout is meant to keep quality and user satisfaction high, and it lets OpenAI gather feedback and make refinements before expanding to other platforms.

OpenAI has big plans for GPT Vision. While the initial rollout is limited to Plus and Enterprise users, access to these features will be expanded in the future. OpenAI envisions a world where everyone can benefit from GPT Vision’s capabilities, further democratising AI technology.

The release of GPT Vision represents a significant advancement in artificial intelligence. By allowing ChatGPT Plus users to communicate using photos, it changes what AI-driven interactions can look like. Although public access was announced today, OpenAI first revealed the feature on March 15, 2023, and it has already begun to change how we interact with technology. GPT Vision stands out as a multimodal visual AI system.

The update also signals where user interactions are heading. With speech and image capabilities added, conversations become more natural and engaging: users can talk to ChatGPT and share photos for everything from discussing landmarks to making meal plans to asking for homework help. OpenAI is introducing these features gradually, launching voice first on iOS and Android for Plus and Enterprise users. This lets it collect feedback for future improvements before expanding to other platforms.

OpenAI’s future plans include making GPT Vision’s capabilities available to a broader audience. While the initial focus is on Plus and Enterprise users, the ultimate goal is to democratise AI technology and make GPT Vision available to all users. With this advancement, we can anticipate a future in which AI and visual communication combine to improve our daily lives.

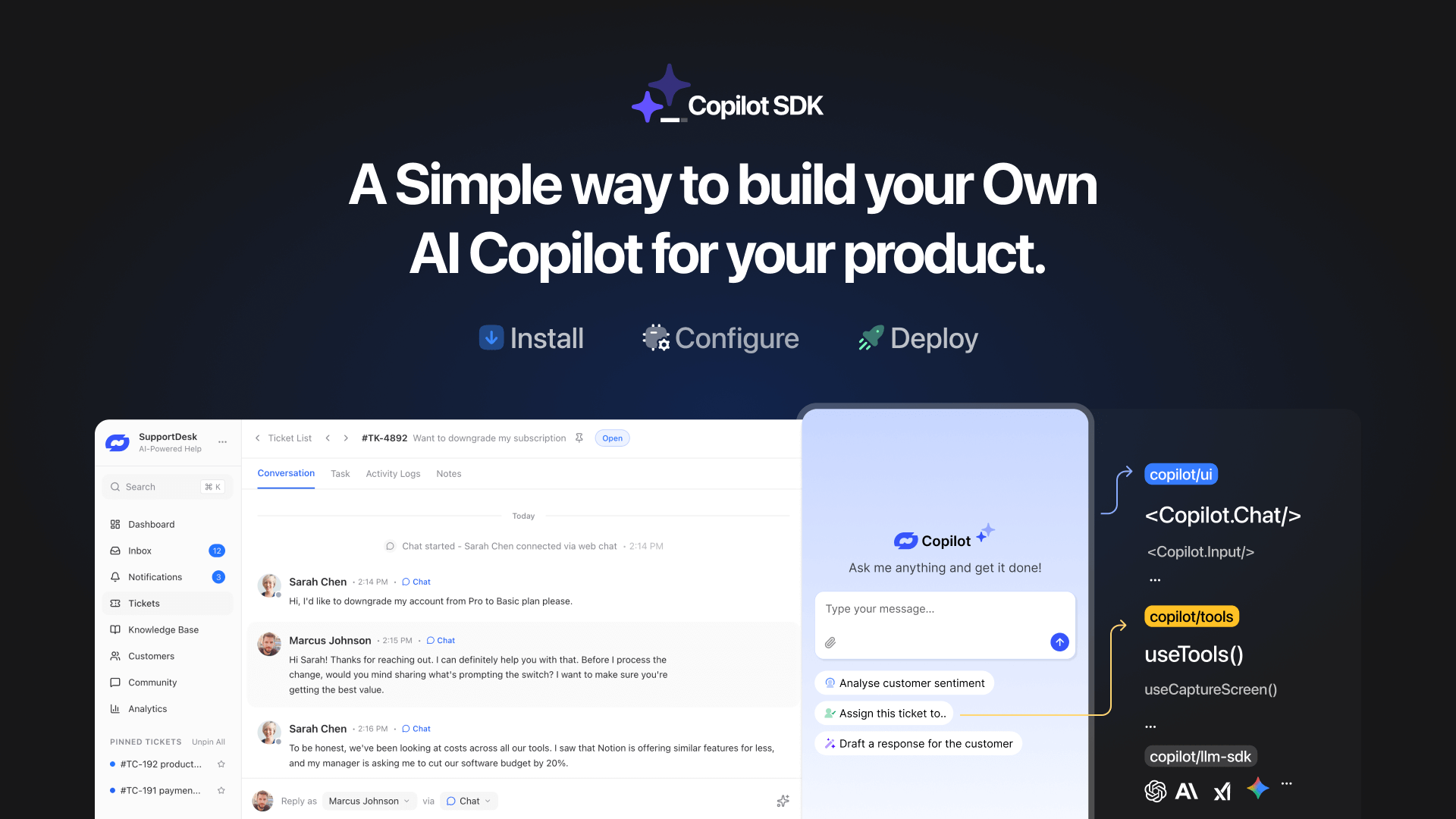

TL;DR YourGPT Copilot SDK is an open-source SDK for building AI agents that understand application state and can take real actions inside your product. Instead of isolated chat widgets, these agents are connected to your product, understand what users are doing, and have full context. This allows teams to build AI that executes tasks directly […]

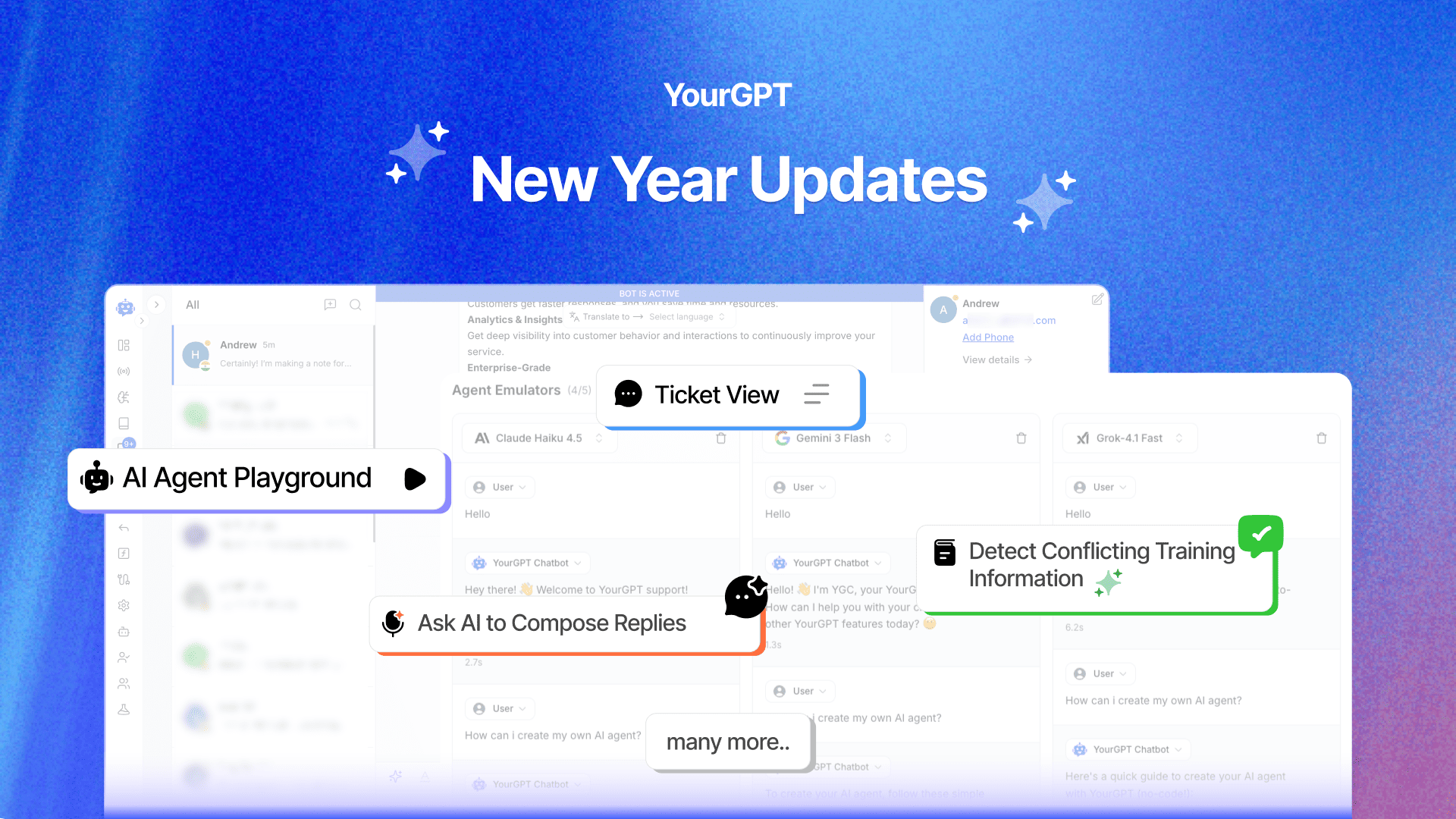

Happy New Year! We hope 2026 brings you closer to everything you’re working toward. Throughout 2025, you’ve seen the platform evolve. We shipped the AI Copilot Builder so your AI could execute actions on both frontend and backend, not just answer questions. We added AI assistance inside Studio to help you generate workflows without starting […]

Grok 4 is xAI’s most advanced large language model, representing a step change from Grok 3. With a 130K+ context window, built-in coding support, and multimodal capabilities, Grok 4 is designed for users who demand both reasoning and performance. If you’re wondering what Grok 4 offers, how it differs from previous versions, and how you […]

OpenAI officially launched GPT-5 on August 7, 2025 during a livestream event, marking one of the most significant AI releases since GPT-4. This unified system combines advanced reasoning capabilities with multimodal processing and introduces a companion family of open-weight models called GPT-OSS. If you are evaluating GPT-5 for your business, comparing it to GPT-4.1, or […]

In 2025, artificial intelligence is a core driver of business growth. Leading companies are using AI to power customer support, automate content, improving operations, and much more. But success with AI doesn’t come from picking the most popular model. It comes from selecting the option that best aligns your business goals and needs. Today, the […]

You’ve seen it on X, heard it on podcasts, maybe even scrolled past a LinkedIn post calling it the future—“Vibe Marketing.” Yes, the term is everywhere. But beneath the noise, there’s a real shift happening. Vibe Marketing is how today’s AI-native teams run fast, test more, and get results without relying on bloated processes or […]