This week in AI | Week 2

This week in artificial intelligence, Google revealed Gemma and Hugging Face introduced Cosmopedia. Additionally, Amazon announced that Mistral AI's high-performing open models will soon be accessible on Amazon Bedrock. Bringing such capable models to more users through cloud-based services may lead to new applications. These advancements show how quickly AI is developing, and the frontiers of what is possible are being redefined at a rapid rate. There are exciting times ahead, with the AI community announcing new achievements every week.

Google introduced Gemma, a notable step in the democratisation of AI technology. Built on the same research and technology as the Gemini models, Gemma is a family of lightweight, state-of-the-art open models intended to support responsible AI development and to give developers and researchers a new base to build on.

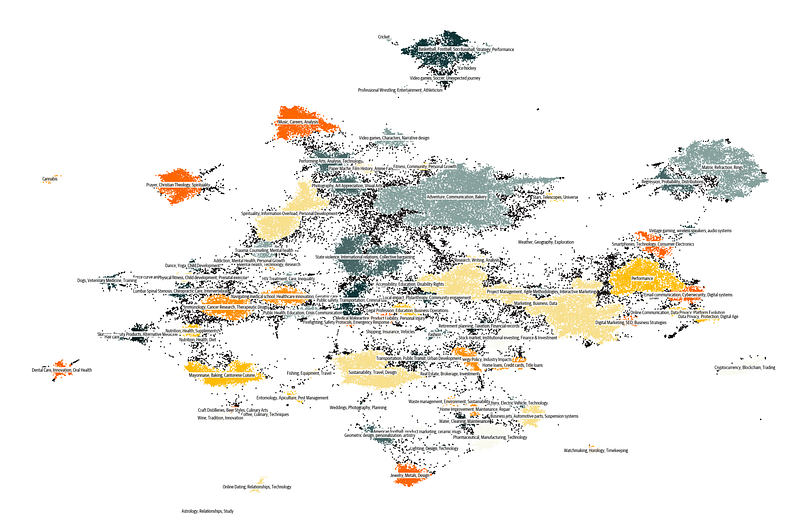

Hugging Face has recently unveiled Cosmopedia v0.1, which it describes as the largest open synthetic dataset to date, consisting of over 30 million samples generated by Mixtral-8x7B-Instruct-v0.1. The dataset comprises content types such as textbooks, blog posts, stories, and WikiHow articles, and totals 25 billion tokens.

Cosmopedia v0.1 is an ambitious attempt to map the world knowledge present in web datasets such as RefinedWeb and RedPajama. It is divided into eight splits, each generated from a different type of seed sample, and covers a wide range of topics.

To make the dataset easier to use, Hugging Face provides code snippets for loading individual splits. A smaller subset, Cosmopedia-100k, is also available for those who want a more manageable dataset. Cosmo-1B, a model trained on Cosmopedia, demonstrates that the dataset can be used for training at scale.
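As a rough illustration of what loading a single split might look like, here is a minimal sketch using the `datasets` library. The dataset ID `HuggingFaceTB/cosmopedia` and the eight configuration names are assumptions taken from the public dataset card and should be checked against the Hub before use; the helper `load_cosmopedia_split` is a hypothetical wrapper, not part of any library.

```python
# Assumed names: the dataset ID and configuration names below are taken from
# the public dataset card and may change; verify them on the Hugging Face Hub.
DATASET_ID = "HuggingFaceTB/cosmopedia"
SPLIT_CONFIGS = (
    "auto_math_text",
    "khanacademy",
    "openstax",
    "stanford",
    "stories",
    "web_samples_v1",
    "web_samples_v2",
    "wikihow",
)


def load_cosmopedia_split(config, streaming=True):
    """Hypothetical helper: load one Cosmopedia split by configuration name.

    Streaming is on by default so that nothing is downloaded in full
    just to inspect a few samples.
    """
    if config not in SPLIT_CONFIGS:
        raise ValueError(f"Unknown split {config!r}; expected one of {SPLIT_CONFIGS}")
    # Imported lazily so the module can be inspected without `datasets` installed.
    from datasets import load_dataset

    return load_dataset(DATASET_ID, config, split="train", streaming=streaming)


if __name__ == "__main__":
    # Requires `pip install datasets` and network access.
    stories = load_cosmopedia_split("stories")
    first_sample = next(iter(stories))
    print(sorted(first_sample.keys()))
```

Streaming suits a first look at the data; for training runs, passing `streaming=False` downloads and caches the split locally instead.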

Cosmopedia was designed to maximise diversity while minimising redundancy. Through prompts targeted at different styles and audiences, iterative prompt refinement, and MinHash deduplication, the dataset achieves broad topic coverage with little duplicated content.
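To give a feel for how MinHash deduplication works in general, the following is a minimal, self-contained sketch. It is not Hugging Face's pipeline: the shingle size, the number of hash functions, the 0.7 threshold, and all function names are assumptions chosen for illustration. It also compares every document against every kept document, whereas production systems typically use locality-sensitive hashing to avoid that quadratic cost.

```python
import hashlib
import re


def shingles(text, k=3):
    """Return the set of lowercase word k-grams (shingles) in the text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {" ".join(words)} if words else set()
    return {" ".join(words[i:i + k]) for i in range(len(words) - k + 1)}


def seeded_hash(seed, item):
    """Hash a shingle to a 64-bit integer; the seed selects the hash function."""
    digest = hashlib.blake2b(
        item.encode("utf-8"), digest_size=8, salt=seed.to_bytes(8, "little")
    ).digest()
    return int.from_bytes(digest, "little")


def minhash_signature(text, num_hashes=64, k=3):
    """For each seeded hash function, keep the minimum hash over all shingles."""
    items = shingles(text, k)
    if not items:
        return [0] * num_hashes  # empty documents all share one signature
    return [min(seeded_hash(seed, s) for s in items) for seed in range(num_hashes)]


def estimated_jaccard(sig_a, sig_b):
    """The fraction of matching positions estimates the Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def deduplicate(docs, threshold=0.7, num_hashes=64):
    """Keep a document only if it is not a near-duplicate of one already kept."""
    kept, kept_signatures = [], []
    for doc in docs:
        sig = minhash_signature(doc, num_hashes)
        if all(estimated_jaccard(sig, other) < threshold for other in kept_signatures):
            kept.append(doc)
            kept_signatures.append(sig)
    return kept


if __name__ == "__main__":
    samples = [
        "The cat sat on the mat today",
        "the CAT sat on the mat today",  # same shingles after lowercasing
        "Photosynthesis converts light into chemical energy",
    ]
    for doc in deduplicate(samples):
        print(doc)
```

The key property is that two documents agree at a given signature position with probability equal to the Jaccard similarity of their shingle sets, so short fixed-length signatures can stand in for full set comparisons.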

In another development, Mistral AI, a France-based AI company known for its fast and secure large language models (LLMs), is set to make its models available on Amazon Bedrock. Mistral AI will become the seventh foundation model provider on Amazon Bedrock, alongside other leading AI companies.

The models are expected to be publicly available on Amazon Bedrock soon, giving developers and researchers more options for building and scaling generative AI applications.

This week’s developments highlight a strong push for more open, accessible, and responsible AI technologies. Google’s Gemma offers cutting-edge open models for responsible development, Hugging Face’s Cosmopedia expands the scope of synthetic data research, and Mistral AI’s move to Amazon Bedrock widens access to its models. Together, they show the AI community continuing to push for inclusive, diverse, and ethically grounded innovation.

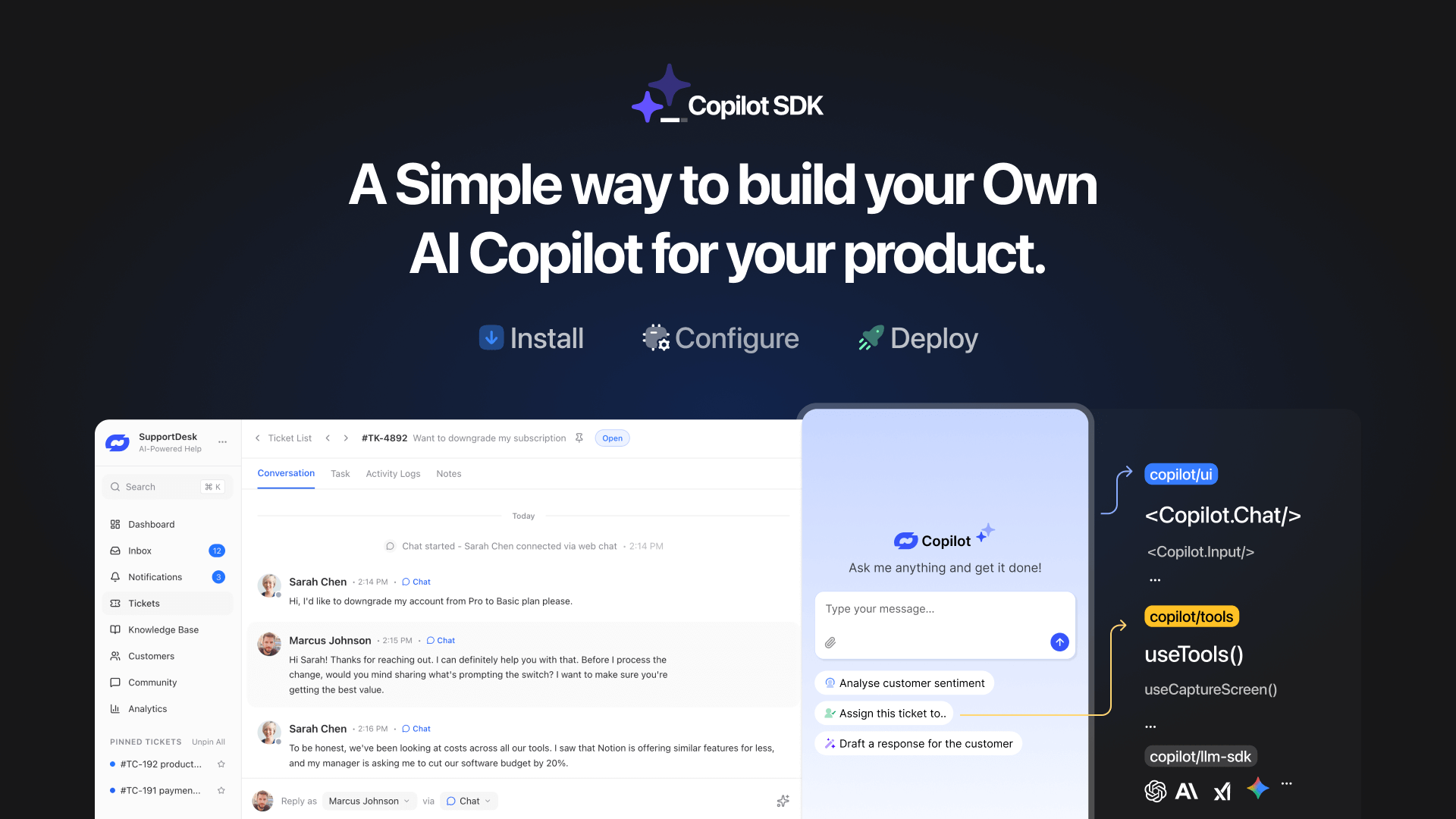

TL;DR YourGPT Copilot SDK is an open-source SDK for building AI agents that understand application state and can take real actions inside your product. Instead of isolated chat widgets, these agents are connected to your product, understand what users are doing, and have full context. This allows teams to build AI that executes tasks directly […]

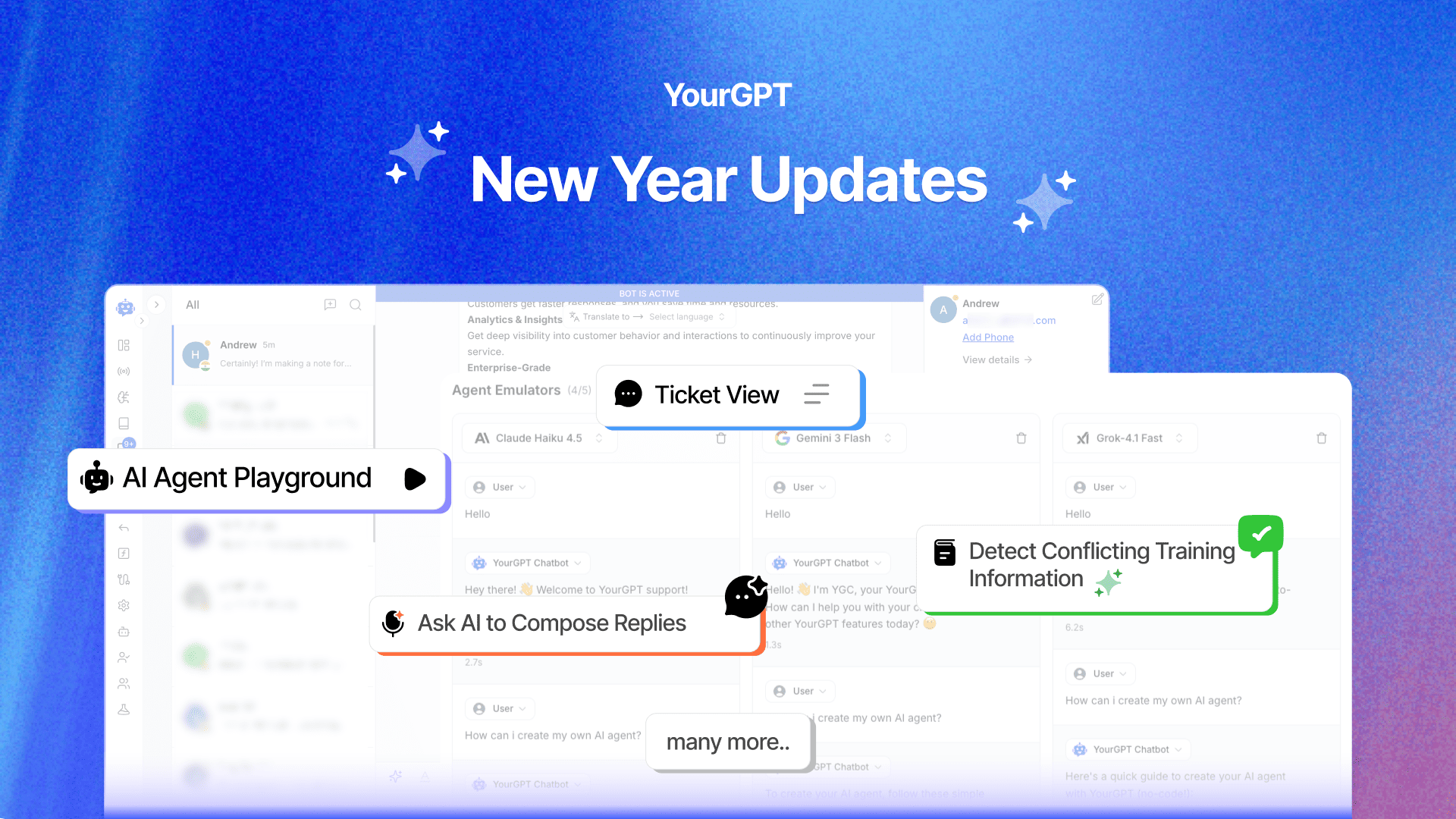

Happy New Year! We hope 2026 brings you closer to everything you’re working toward. Throughout 2025, you’ve seen the platform evolve. We shipped the AI Copilot Builder so your AI could execute actions on both frontend and backend, not just answer questions. We added AI assistance inside Studio to help you generate workflows without starting […]

Grok 4 is xAI’s most advanced large language model, representing a step change from Grok 3. With a 130K+ context window, built-in coding support, and multimodal capabilities, Grok 4 is designed for users who demand both reasoning and performance. If you’re wondering what Grok 4 offers, how it differs from previous versions, and how you […]

OpenAI officially launched GPT-5 on August 7, 2025 during a livestream event, marking one of the most significant AI releases since GPT-4. This unified system combines advanced reasoning capabilities with multimodal processing and introduces a companion family of open-weight models called GPT-OSS. If you are evaluating GPT-5 for your business, comparing it to GPT-4.1, or […]

In 2025, artificial intelligence is a core driver of business growth. Leading companies are using AI to power customer support, automate content, improving operations, and much more. But success with AI doesn’t come from picking the most popular model. It comes from selecting the option that best aligns your business goals and needs. Today, the […]

You’ve seen it on X, heard it on podcasts, maybe even scrolled past a LinkedIn post calling it the future—“Vibe Marketing.” Yes, the term is everywhere. But beneath the noise, there’s a real shift happening. Vibe Marketing is how today’s AI-native teams run fast, test more, and get results without relying on bloated processes or […]