This week in AI | Week 15

This week’s AI announcements include Meta’s multimodal AI model, Cohere For AI’s multilingual open language model, and Microsoft’s AI-integrated personal computers. These updates reflect the ongoing progress in artificial intelligence technologies. Let’s examine these developments more closely.

First, Meta has launched Chameleon, its latest development in generative AI. This multimodal model handles visual and textual information together, with promising capabilities in tasks such as image captioning and visual question answering. Unlike traditional models that merge different modalities at later stages, Chameleon integrates them at the very beginning of the process, which improves its ability to understand and generate mixed-modal content.
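To make the "integrate at the very beginning" idea more concrete, here is a minimal toy sketch of early fusion in Python. It is not Meta's implementation: the tokenizers, vocabulary sizes, and function names below are all hypothetical and chosen only for illustration. The point is that text and image content are both turned into tokens in one shared ID space and placed in a single sequence before any model sees them.

```python
from typing import List

# Hypothetical sizes, chosen only for this illustration.
TEXT_VOCAB_SIZE = 1000
IMAGE_CODEBOOK_SIZE = 16


def tokenize_text(text: str) -> List[int]:
    """Toy text tokenizer: map each word to an ID in [0, TEXT_VOCAB_SIZE)."""
    return [sum(ord(c) for c in word) % TEXT_VOCAB_SIZE for word in text.split()]


def tokenize_image(pixels: List[int]) -> List[int]:
    """Toy image tokenizer: quantize each 0-255 pixel value to a codebook
    index, then offset it so image IDs never collide with text IDs."""
    return [TEXT_VOCAB_SIZE + (p * IMAGE_CODEBOOK_SIZE // 256) for p in pixels]


def early_fusion_sequence(text: str, pixels: List[int]) -> List[int]:
    """Early fusion: one token sequence containing both modalities, which a
    single model would then process from its first layer onward."""
    return tokenize_text(text) + tokenize_image(pixels)


if __name__ == "__main__":
    sequence = early_fusion_sequence("a cat", [0, 128, 255])
    print(sequence)
```

A late-fusion design, by contrast, would run separate text and image encoders and only combine their outputs near the end.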

Cohere For AI has introduced Aya 23, a multilingual generative large language model covering 23 languages, as part of its ongoing commitment to global AI research inclusivity. The model aims to provide robust support for a variety of languages, significantly expanding access to cutting-edge AI technology worldwide.

Microsoft has launched Copilot+ PCs, integrating advanced AI capabilities directly into personal computing hardware. This move not only enhances the functionality of PCs but also sets a new standard in the personal computing market, positioning Microsoft to take a lead in the AI-driven technology space.

This week, Meta, Cohere For AI, and Microsoft shared notable updates about their AI technologies. They are working on improving how we interact with technology, supporting more languages, and integrating AI into our everyday devices.

We will keep watching for more AI updates in the coming weeks. Stay tuned for more news.

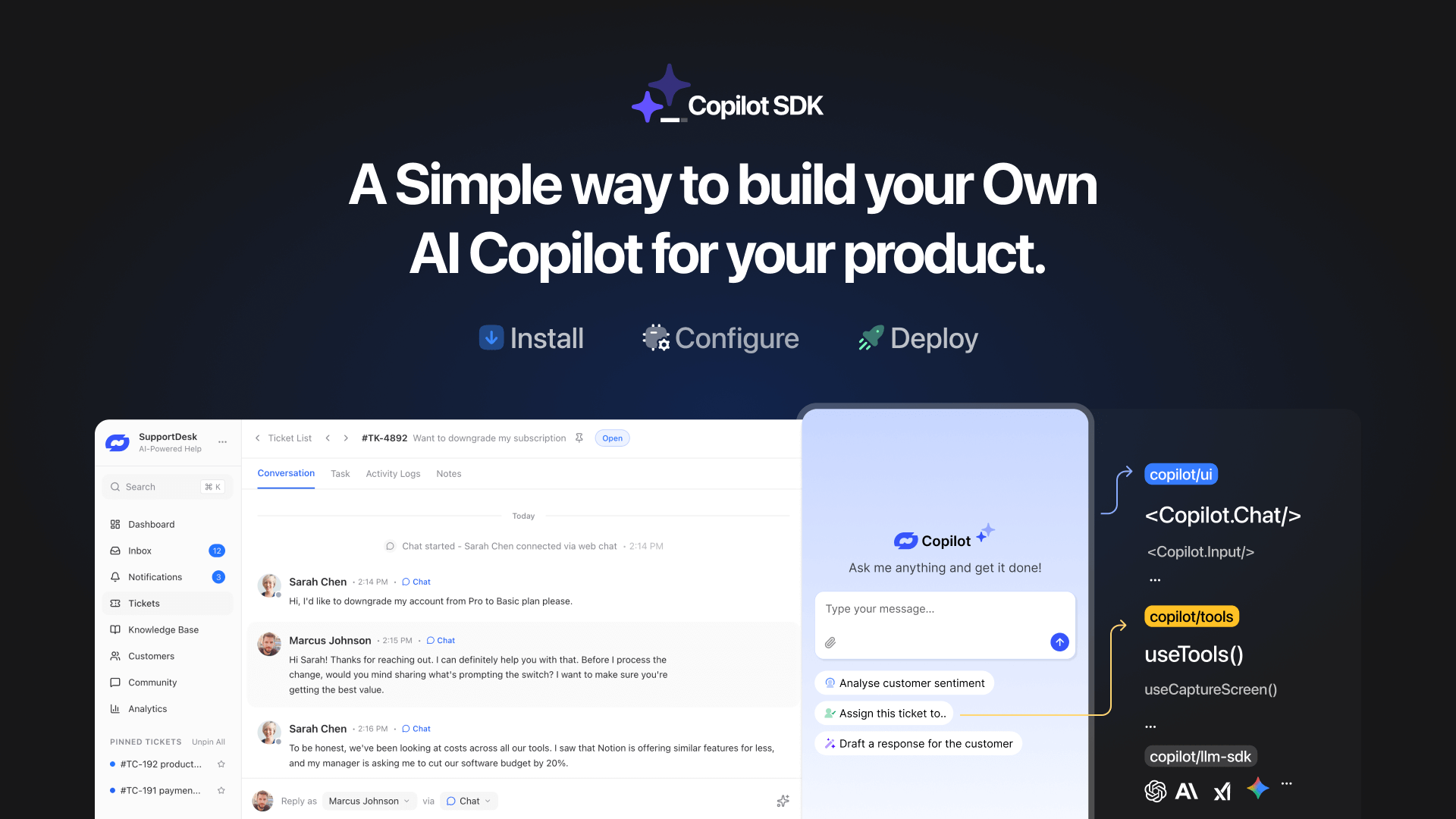

TL;DR YourGPT Copilot SDK is an open-source SDK for building AI agents that understand application state and can take real actions inside your product. Instead of isolated chat widgets, these agents are connected to your product, understand what users are doing, and have full context. This allows teams to build AI that executes tasks directly […]

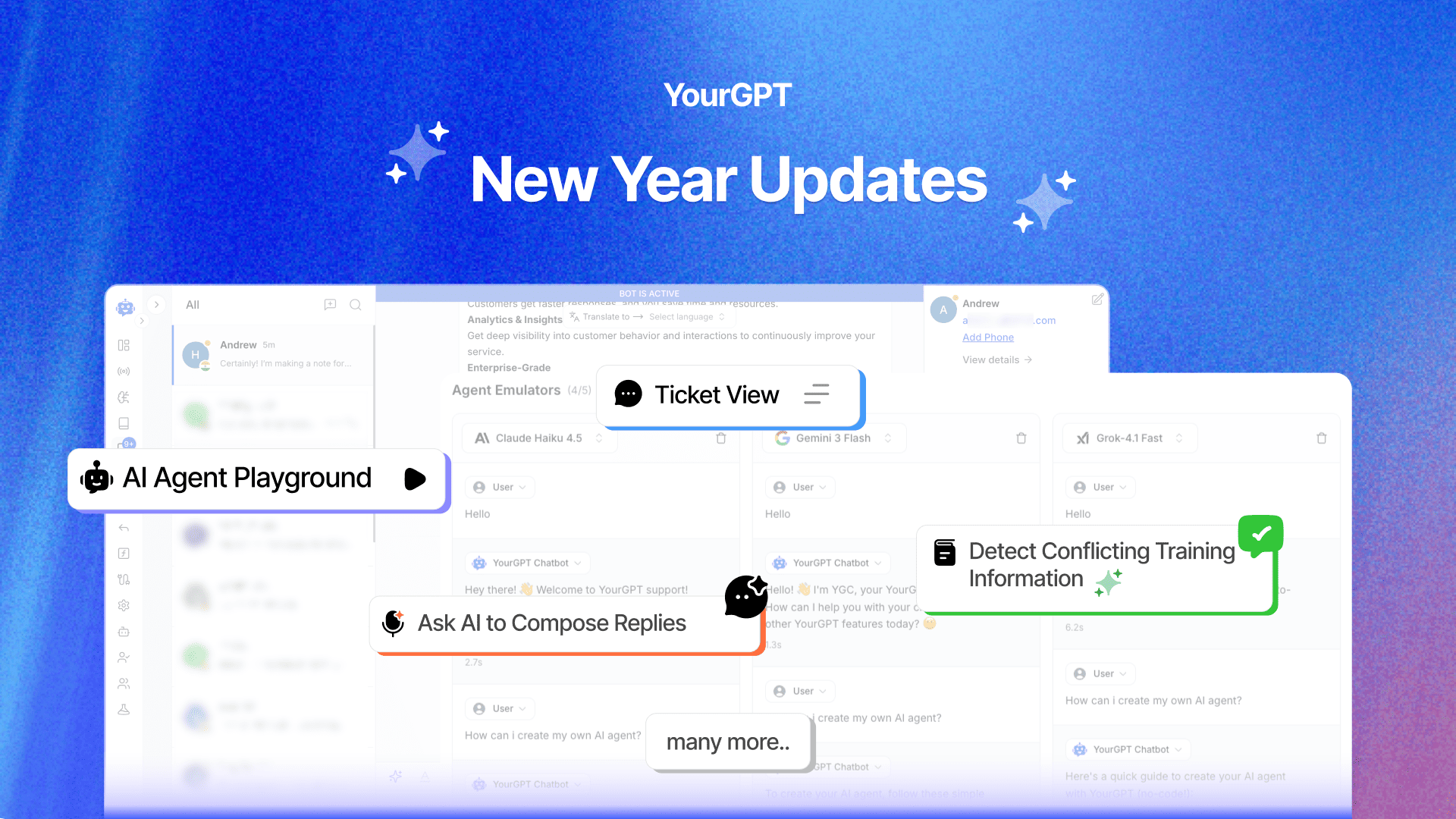

Happy New Year! We hope 2026 brings you closer to everything you’re working toward. Throughout 2025, you’ve seen the platform evolve. We shipped the AI Copilot Builder so your AI could execute actions on both frontend and backend, not just answer questions. We added AI assistance inside Studio to help you generate workflows without starting […]

Grok 4 is xAI’s most advanced large language model, representing a step change from Grok 3. With a 130K+ context window, built-in coding support, and multimodal capabilities, Grok 4 is designed for users who demand both reasoning and performance. If you’re wondering what Grok 4 offers, how it differs from previous versions, and how you […]

OpenAI officially launched GPT-5 on August 7, 2025 during a livestream event, marking one of the most significant AI releases since GPT-4. This unified system combines advanced reasoning capabilities with multimodal processing and introduces a companion family of open-weight models called GPT-OSS. If you are evaluating GPT-5 for your business, comparing it to GPT-4.1, or […]

In 2025, artificial intelligence is a core driver of business growth. Leading companies are using AI to power customer support, automate content, improving operations, and much more. But success with AI doesn’t come from picking the most popular model. It comes from selecting the option that best aligns your business goals and needs. Today, the […]

You’ve seen it on X, heard it on podcasts, maybe even scrolled past a LinkedIn post calling it the future—“Vibe Marketing.” Yes, the term is everywhere. But beneath the noise, there’s a real shift happening. Vibe Marketing is how today’s AI-native teams run fast, test more, and get results without relying on bloated processes or […]