OpenAI DevDay Unveils Exciting Updates and Features

OpenAI DevDay introduced a set of model and API updates aimed at developers. This post walks through the main announcements: GPT-4 Turbo with a 128K context window, the Assistants API, new modalities including vision and text-to-speech, and changes to pricing and rate limits.

GPT-4 Turbo with 128K Context Window: OpenAI introduced GPT-4 Turbo, the next-generation model following GPT-4. Its 128K-token context window lets a single prompt carry far more text than before, which opens it up to a wider range of applications. It is also cheaper than its predecessor: input tokens cost 3x less and output tokens cost 2x less than with GPT-4.
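To make the price ratios concrete, here is a minimal sketch that compares the cost of one request on both models. Only the 3x and 2x ratios come from the announcement above. The function name `compare_costs` and the baseline per-1K-token prices in the example are illustrative assumptions, so check the current pricing page before relying on any of the numbers.

```python
# Ratios taken from the announcement: input tokens are 3x cheaper,
# output tokens are 2x cheaper on GPT-4 Turbo than on GPT-4.
INPUT_RATIO = 3
OUTPUT_RATIO = 2


def compare_costs(input_tokens, output_tokens, gpt4_input_price, gpt4_output_price):
    """Return (gpt4_cost, turbo_cost) for one request.

    Prices are per 1,000 tokens. The GPT-4 prices are supplied by the
    caller; the Turbo prices are derived from them using the ratios above.
    """
    turbo_input_price = gpt4_input_price / INPUT_RATIO
    turbo_output_price = gpt4_output_price / OUTPUT_RATIO

    gpt4_cost = (input_tokens / 1000) * gpt4_input_price \
        + (output_tokens / 1000) * gpt4_output_price
    turbo_cost = (input_tokens / 1000) * turbo_input_price \
        + (output_tokens / 1000) * turbo_output_price
    return gpt4_cost, turbo_cost


# Assumed baseline prices (USD per 1K tokens), for illustration only.
gpt4_cost, turbo_cost = compare_costs(
    input_tokens=100_000,
    output_tokens=1_000,
    gpt4_input_price=0.03,
    gpt4_output_price=0.06,
)
print(f"GPT-4: ${gpt4_cost:.2f}  GPT-4 Turbo: ${turbo_cost:.2f}")
```

Because long-context prompts are dominated by input tokens, the overall saving for a request like this one ends up close to the 3x input ratio rather than the 2x output ratio.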

Function Calling Updates: Function calling lets developers describe functions to the model and have it output a JSON object containing the arguments for a call. With this update the model can request multiple function calls in a single message, so an action that used to take several round trips can be handled in one.
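The flow described above can be sketched without touching the network. The example below assumes the Chat Completions `tools` / `tool_calls` message shape. The two local functions (`get_weather`, `get_time`), the helper `run_tool_calls`, and the hand-written `mock_message` are hypothetical stand-ins for a real application and a real model reply.

```python
import json

# Function descriptions in the shape passed as the `tools` request field.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
    for name, description in [
        ("get_weather", "Look up the current weather for a city."),
        ("get_time", "Look up the local time for a city."),
    ]
]


# Hypothetical local implementations the application would provide.
def get_weather(city):
    return f"Sunny in {city}"


def get_time(city):
    return f"12:00 in {city}"


LOCAL_FUNCTIONS = {"get_weather": get_weather, "get_time": get_time}


def run_tool_calls(assistant_message):
    """Execute every tool call in one assistant message.

    Returns one `tool` role message per call, ready to append to the
    conversation before asking the model for its final answer.
    """
    results = []
    for call in assistant_message.get("tool_calls") or []:
        function = LOCAL_FUNCTIONS[call["function"]["name"]]
        # The model returns the arguments as a JSON-encoded string.
        arguments = json.loads(call["function"]["arguments"])
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": function(**arguments),
        })
    return results


# Hand-written stand-in for a model reply that requests two calls at once.
mock_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}},
        {"id": "call_2", "type": "function",
         "function": {"name": "get_time", "arguments": '{"city": "Paris"}'}},
    ],
}

tool_messages = run_tool_calls(mock_message)
```

In a real application the arguments should be validated before the call, since the model may produce values the function does not expect.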

Improved Instruction Following and JSON Mode: GPT-4 Turbo performs better on tasks that require following instructions precisely, and it supports a JSON mode. Setting the response_format parameter constrains the model’s output to a syntactically valid JSON object.
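As a rough sketch, a JSON mode request and the matching parsing step might look like this. The payload is built as a plain dictionary rather than sent, the model name is an assumption, and `build_json_mode_request` and `parse_json_reply` are hypothetical helper names. The system message mentions JSON explicitly because the API expects the instruction to appear somewhere in the conversation when this mode is on.

```python
import json


def build_json_mode_request(user_prompt, model="gpt-4-1106-preview"):
    """Build a Chat Completions payload with JSON mode enabled.

    The model name is an assumed default; substitute whichever
    JSON-mode-capable model your account uses.
    """
    return {
        "model": model,
        "response_format": {"type": "json_object"},
        "messages": [
            {"role": "system", "content": "Reply with a single JSON object."},
            {"role": "user", "content": user_prompt},
        ],
    }


def parse_json_reply(content):
    """Parse the reply text, returning None if it is not valid JSON.

    JSON mode targets syntactic validity, but a reply that was cut off
    by the token limit can still be incomplete, so parse defensively.
    """
    try:
        return json.loads(content)
    except json.JSONDecodeError:
        return None


request = build_json_mode_request("List three primary colors under the key 'colors'.")
parsed = parse_json_reply('{"colors": ["red", "yellow", "blue"]}')
truncated = parse_json_reply('{"colors": ["red", "yel')
```

JSON mode constrains syntax only. It does not enforce a particular schema, so the application still needs to check that the expected keys are present.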

Reproducible Outputs and Log Probabilities: The new seed parameter makes outputs reproducible by having the model return consistent completions most of the time. This is useful for debugging, writing unit tests, and keeping tighter control over the model’s behavior. OpenAI also plans to expose log probabilities for the most likely output tokens, which helps with features such as autocomplete in a search experience.
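A minimal sketch of how the seed parameter might be used in a test setup follows. It assumes that responses carry a `system_fingerprint` field identifying the backend configuration, and that two runs are only expected to match when the seed, the other request parameters, and that fingerprint all agree. The helper names, the model name, and the mock responses are hypothetical.

```python
def build_seeded_request(prompt, seed, model="gpt-4-1106-preview"):
    """Build a Chat Completions payload that pins the sampling seed."""
    return {
        "model": model,   # assumed model name
        "seed": seed,     # same seed + same parameters -> mostly the same output
        "temperature": 0,
        "messages": [{"role": "user", "content": prompt}],
    }


def outputs_comparable(response_a, response_b):
    """Return True when two responses came from the same backend setup.

    If the fingerprints differ, a mismatch in the completions does not
    necessarily point to a bug in the calling code.
    """
    fingerprint_a = response_a.get("system_fingerprint")
    fingerprint_b = response_b.get("system_fingerprint")
    return fingerprint_a is not None and fingerprint_a == fingerprint_b


request_1 = build_seeded_request("Name a prime number.", seed=42)
request_2 = build_seeded_request("Name a prime number.", seed=42)

# Hand-written stand-ins for two API responses.
mock_a = {"system_fingerprint": "fp_example_1", "choices": []}
mock_b = {"system_fingerprint": "fp_example_1", "choices": []}
mock_c = {"system_fingerprint": "fp_example_2", "choices": []}
```

A unit test built on this would compare completions only when `outputs_comparable` returns True, and otherwise skip or re-record the expected output.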

Updated GPT-3.5 Turbo: Alongside GPT-4 Turbo, OpenAI is launching a new version of GPT-3.5 Turbo that supports a 16K context window by default. The updated model brings improved instruction following, JSON mode, and parallel function calling, and OpenAI reports a 38% improvement on format-following tasks such as JSON generation.

Assistants API, Retrieval, and Code Interpreter: OpenAI introduced the Assistants API for building agent-like AI experiences inside applications. An assistant can call models and tools to carry out tasks, and the available tools are Code Interpreter, Retrieval, and Function Calling. The API covers a wide range of use cases, from natural language-based data analysis to voice-controlled applications.

New Modalities in the API: GPT-4 Turbo now accepts image input in the Chat Completions API. Developers can use this to generate captions, analyze images, or read documents that contain figures. DALL·E 3 and text-to-speech (TTS) are also available through the API, covering image generation and human-quality speech synthesis.
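For image input, a user message carries a list of content parts instead of a single string. The sketch below builds such a message from raw image bytes using a base64 data URL. The helper name `image_message` is hypothetical, and the vision-capable model name mentioned in the comment is an assumption.

```python
import base64


def image_message(prompt, image_bytes, mime_type="image/png"):
    """Build a user message that pairs a text prompt with one image.

    The image is embedded as a base64 data URL; a plain https URL to a
    hosted image can be used in the same field instead.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {
                "type": "image_url",
                "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
            },
        ],
    }


# Placeholder bytes standing in for a real image file's contents.
message = image_message("Write a one-line caption for this image.", b"abc")

# The message would go into the `messages` list of a request to a
# vision-capable model (assumed name: "gpt-4-vision-preview").
```

Embedding the image inline keeps the request self-contained, at the cost of a larger payload than passing a URL.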

Model Customization: OpenAI is working on an experimental access program for GPT-4 fine-tuning, offering developers more control over model behavior. Additionally, a Custom Models program is in the works, allowing organizations to collaborate with OpenAI to train custom GPT-4 models tailored to their specific domains.

Lower Prices and Higher Rate Limits: OpenAI is reducing prices across several models, making AI more cost-effective for developers. GPT-4 customers get higher rate limits, and a clearly defined usage tier system makes it easier to plan for scale.

Copyright Shield: OpenAI is introducing Copyright Shield, stepping in to defend customers and cover costs in case of legal claims related to copyright infringement. This safeguard applies to ChatGPT Enterprise and the developer platform, demonstrating OpenAI’s commitment to customer protection.

Whisper v3 and Consistency Decoder: Whisper large-v3 is the latest version of OpenAI’s open-source automatic speech recognition model, offering improved performance across multiple languages. The Consistency Decoder, an open-source replacement for the Stable Diffusion VAE decoder, significantly enhances image quality, especially for text, faces, and straight lines.

Conclusion:

With these changes and features, OpenAI DevDay has changed the AI space considerably. These developments, from GPT-4 Turbo to the Assistants API, vision capabilities, and more, move us closer to a time when artificial intelligence will be a seamless part of our daily lives, bringing with it increased flexibility, cost-effectiveness, and functionality. As developers explore these new tools and capabilities, we can expect to see a wave of AI-driven applications that benefit users across various domains.

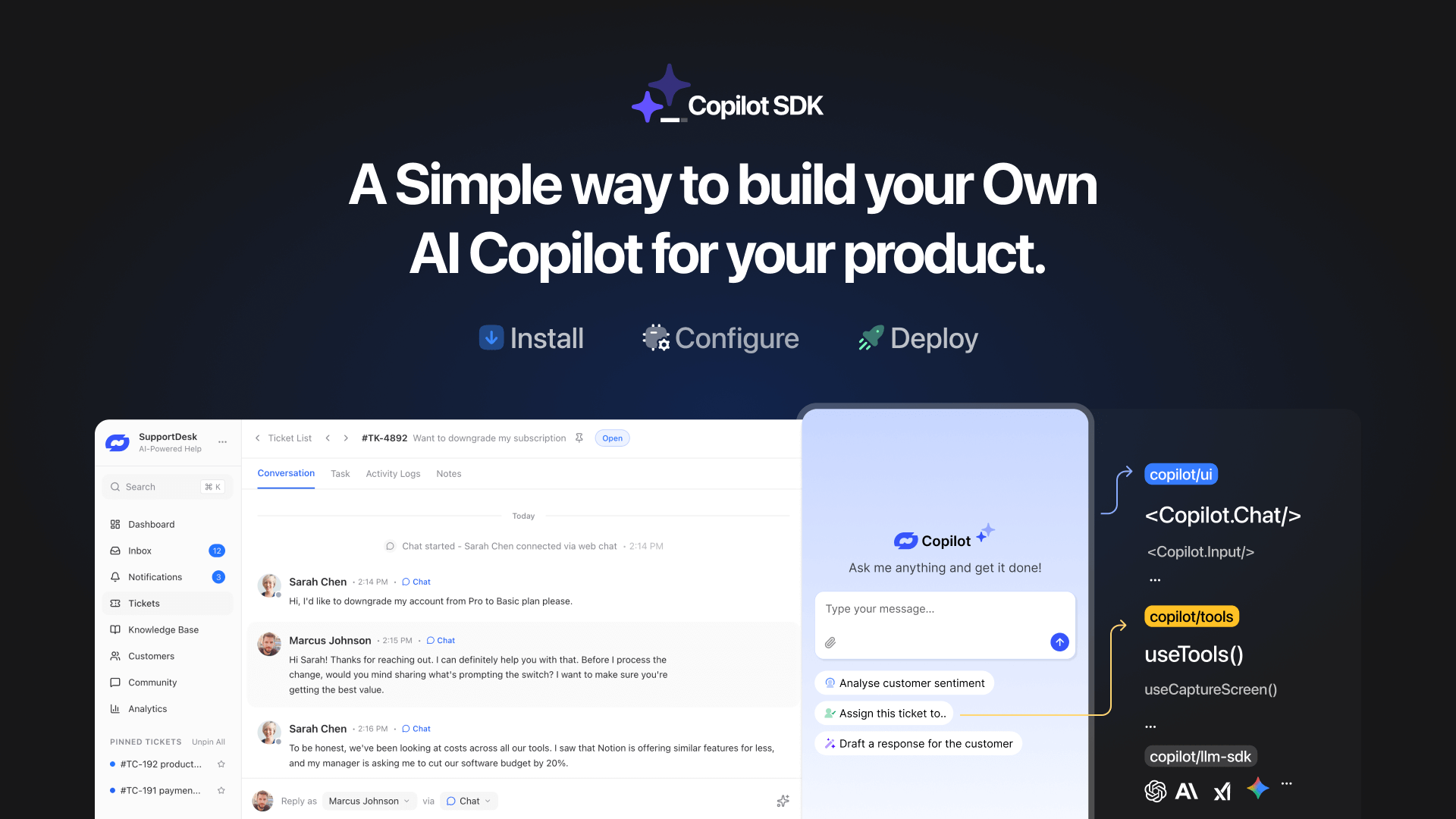

TL;DR YourGPT Copilot SDK is an open-source SDK for building AI agents that understand application state and can take real actions inside your product. Instead of isolated chat widgets, these agents are connected to your product, understand what users are doing, and have full context. This allows teams to build AI that executes tasks directly […]

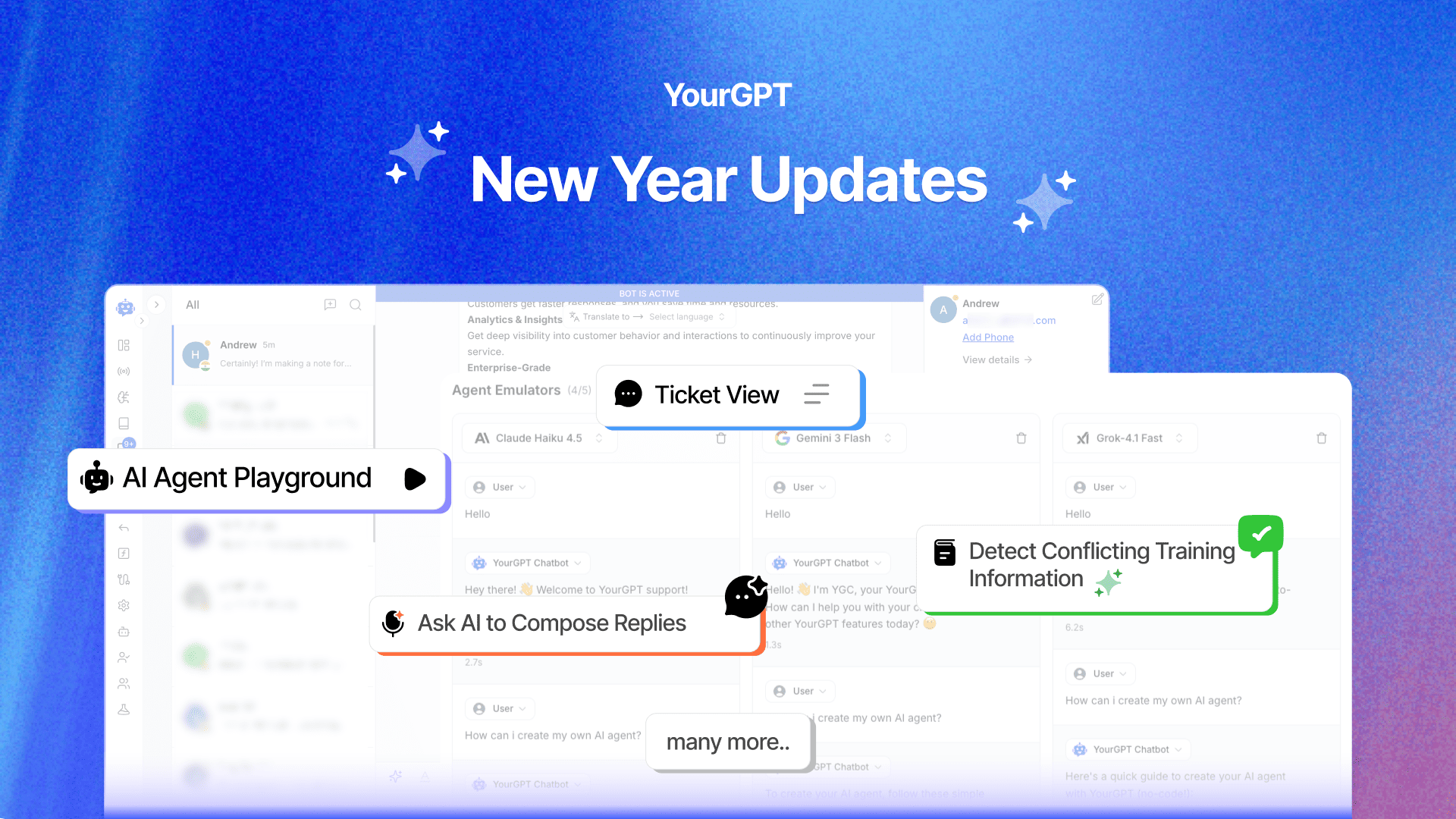

Happy New Year! We hope 2026 brings you closer to everything you’re working toward. Throughout 2025, you’ve seen the platform evolve. We shipped the AI Copilot Builder so your AI could execute actions on both frontend and backend, not just answer questions. We added AI assistance inside Studio to help you generate workflows without starting […]

Grok 4 is xAI’s most advanced large language model, representing a step change from Grok 3. With a 130K+ context window, built-in coding support, and multimodal capabilities, Grok 4 is designed for users who demand both reasoning and performance. If you’re wondering what Grok 4 offers, how it differs from previous versions, and how you […]

OpenAI officially launched GPT-5 on August 7, 2025 during a livestream event, marking one of the most significant AI releases since GPT-4. This unified system combines advanced reasoning capabilities with multimodal processing and introduces a companion family of open-weight models called GPT-OSS. If you are evaluating GPT-5 for your business, comparing it to GPT-4.1, or […]

In 2025, artificial intelligence is a core driver of business growth. Leading companies are using AI to power customer support, automate content, improving operations, and much more. But success with AI doesn’t come from picking the most popular model. It comes from selecting the option that best aligns your business goals and needs. Today, the […]

You’ve seen it on X, heard it on podcasts, maybe even scrolled past a LinkedIn post calling it the future—“Vibe Marketing.” Yes, the term is everywhere. But beneath the noise, there’s a real shift happening. Vibe Marketing is how today’s AI-native teams run fast, test more, and get results without relying on bloated processes or […]