GPT-4: OpenAI’s Groundbreaking Multimodal Model

GPT-4, OpenAI’s multimodal large language model, can process both text and image inputs. This makes it better than earlier GPT models at understanding prompts and generating relevant responses.

Its ability to handle multimodal data is being applied across industries, helping to streamline tasks and improve efficiency. Staying informed about GPT-4’s developments is important for businesses and developers looking to leverage its potential.

GPT-4, short for Generative Pre-trained Transformer 4, is a multimodal large language model (LLM) developed by OpenAI. Launched on March 14, 2023, GPT-4 accepts both text and image inputs, making it the first multimodal model in the GPT series. It launched with an 8,192-token context window (32,768 tokens in the gpt-4-32k variant). OpenAI has not disclosed its parameter count; the often-quoted figure of 175 billion parameters belongs to GPT-3.

Users can access GPT-4 through a paid ChatGPT Plus subscription, through OpenAI’s API, and through Microsoft Copilot, a free chatbot.
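For API access, a request is an HTTPS POST to OpenAI’s Chat Completions endpoint. The sketch below uses only the Python standard library and assumes an `OPENAI_API_KEY` environment variable and an account with access to the `gpt-4` model. The helper names `build_chat_request` and `send_chat_request` are hypothetical, not part of any OpenAI library; OpenAI also publishes an official `openai` Python package that wraps this endpoint.

```python
import json
import os
import urllib.request

# Chat Completions endpoint of the OpenAI REST API
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, model: str = "gpt-4") -> dict:
    """Build a JSON-serializable request body for a single-turn chat."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
    }


def send_chat_request(body: dict) -> str:
    """POST the body to the API and return the assistant's reply text."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumes the key is stored in the OPENAI_API_KEY environment variable
            "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    # The reply text is in the first choice's message
    return payload["choices"][0]["message"]["content"]
```

Usage: `send_chat_request(build_chat_request("Summarize this paragraph in one sentence: ..."))` sends the request and returns the model's answer as a string. Keeping request construction separate from the network call makes the body easy to inspect and log before sending.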

Because it accepts images, GPT-4 can describe pictures, summarize text from screenshots, and analyze diagrams. It builds on earlier GPT models by using a transformer-based neural network architecture to generate accurate, contextually relevant text.
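OpenAI’s technical report describes GPT-4 as a Transformer-style model but does not publish its architectural details. The central operation of any transformer is scaled dot-product attention, where $Q$, $K$, and $V$ are the query, key, and value matrices and $d_k$ is the key dimension:

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right) V
```

Models pre-trained to predict the next token, as GPT models are, apply a causal mask inside the softmax so that each position can attend only to itself and earlier positions. Dividing by $\sqrt{d_k}$ keeps the dot products from growing with the key dimension, which would otherwise push the softmax toward saturated, near-one-hot weights.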

GPT-4 offers an array of features that make it stand out:

GPT-4 accepts both text and image inputs. For instance, you can upload a scientific diagram and ask it to explain the concepts visually depicted.
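In the Chat Completions API, an image is sent as one part of a message whose `content` is a list of typed parts. The sketch below only builds the request body. The default model name `gpt-4-turbo` is an assumption (use whichever vision-capable GPT-4 model your account can access), and the diagram URL is hypothetical.

```python
import json


def build_image_question(
    image_url: str, question: str, model: str = "gpt-4-turbo"
) -> dict:
    """Build a Chat Completions body that pairs a text question with an image.

    The default model name is an assumption: substitute any vision-capable
    GPT-4 model available to your account.
    """
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                # Multimodal content is a list of typed parts
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
        "max_tokens": 500,  # cap the length of the explanation
    }


# Hypothetical diagram URL, used for illustration only
body = build_image_question(
    "https://example.com/photosynthesis-diagram.png",
    "Explain the scientific concepts shown in this diagram.",
)
print(json.dumps(body, indent=2))
```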

The model generates creative outputs like poems, code, scripts, musical compositions, and more. Users can even request GPT-4 to mimic their writing style or create personalized content.
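One common way to ask for a personal writing style is few-shot prompting: include a few samples of your own writing in the prompt and ask the model to match them. This sketch only builds the `messages` list for a chat request; the function name, prompt wording, and sample texts are illustrative assumptions.

```python
def build_style_messages(samples: list[str], request: str) -> list[dict]:
    """Build chat messages asking the model to imitate the given writing samples."""
    # Separate samples clearly so the model treats them as distinct examples
    examples = "\n\n---\n\n".join(samples)
    system_prompt = (
        "Match the voice, tone, and sentence style of these writing samples "
        "from the user:\n\n" + examples
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": request},
    ]


# Hypothetical samples of the user's own writing
messages = build_style_messages(
    [
        "Short sentences. Plain words. No fluff.",
        "We ship on Fridays. We fix on Mondays.",
    ],
    "Write a two-sentence product update about our new search feature.",
)
```

Putting the samples in the system message keeps them separate from the actual request, so the same style instructions can be reused across many requests.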

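GPT-4 produces incorrect or nonsensical outputs less often than earlier GPT models; OpenAI reported that it scored 40% higher than GPT-3.5 on its internal adversarial factuality evaluations. It can still make factual errors, however, so outputs used for fact-driven tasks should be verified.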

With its 32K-token variant, GPT-4 can handle roughly 25,000 words in a single input, enabling comprehensive document analysis, lengthy conversations, and the creation of long-form content.
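Context limits are measured in tokens, not words, and for GPT-4 the limit covers both the prompt and the reply. The sketch below uses OpenAI’s rule of thumb that one token is about 0.75 English words to check whether a document is likely to fit. This is only an approximation; a real tokenizer such as OpenAI’s `tiktoken` gives exact counts. The 8,192 and 32,768 figures are GPT-4’s two context sizes at launch, and the 1,000-token reserve for the reply is an arbitrary assumption.

```python
def estimate_tokens(text: str) -> int:
    """Roughly estimate token count using the ~0.75 words-per-token rule of thumb.

    This is an approximation for English text; use a real tokenizer
    for exact counts.
    """
    words = len(text.split())
    # ceil(words / 0.75), computed with integers as ceil(words * 4 / 3)
    return (words * 4 + 2) // 3


def fits_in_context(text: str, context_window: int, reserved_for_output: int = 1000) -> bool:
    """Check whether the prompt plus room for the reply fits in the window."""
    return estimate_tokens(text) + reserved_for_output <= context_window


document = "word " * 10_000  # a stand-in for a 10,000-word document
print(estimate_tokens(document))          # 13334
print(fits_in_context(document, 8_192))   # False
print(fits_in_context(document, 32_768))  # True
```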

GPT-4 supports code generation, translation, and optimization, making it a valuable asset for developers. It can generate HTML, CSS, and JavaScript based on a simple description of a website.
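Model replies usually wrap generated code in Markdown code fences. A small helper like the hypothetical `extract_code_blocks` below can pull the HTML, CSS, and JavaScript out of a reply so they can be saved to files. It assumes the reply uses triple-backtick fences with a language tag on the opening line.

```python
import re

FENCE = "`" * 3  # built from single backticks so this snippet embeds cleanly in Markdown

# An opening fence with an optional language tag, then the body up to the next fence
BLOCK_PATTERN = re.compile(FENCE + r"(\w*)\n(.*?)" + FENCE, re.DOTALL)


def extract_code_blocks(reply: str) -> dict[str, str]:
    """Map each language tag (e.g. 'html', 'css') to the code found in the reply."""
    blocks: dict[str, str] = {}
    for lang, body in BLOCK_PATTERN.findall(reply):
        key = lang or "text"  # untagged fences are filed under 'text'
        # Join repeated blocks of the same language with a blank line
        blocks[key] = (blocks[key] + "\n\n" if key in blocks else "") + body.strip()
    return blocks


# A made-up reply, shaped like typical model output
sample_reply = (
    "Here is your page:\n"
    f"{FENCE}html\n<h1>Hello</h1>\n{FENCE}\n"
    f"{FENCE}css\nh1 {{ color: teal; }}\n{FENCE}\n"
)
print(extract_code_blocks(sample_reply))
```

A regular expression is enough for well-formed replies; a full Markdown parser would be more robust if replies may contain nested or unterminated fences.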

It analyzes large datasets, identifies trends, and provides insights from charts and graphs, making it a robust tool for research, business, and financial modeling.
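Tabular data is usually passed to the model as text, for example as CSV. Because the whole table must fit in the context window, large datasets generally need to be sampled, aggregated, or split before they are sent. This sketch serializes a small table with Python’s `csv` module and appends a question; the function name and sales figures are hypothetical.

```python
import csv
import io


def build_data_prompt(header: list[str], rows: list[list], question: str) -> str:
    """Serialize a small table as CSV and append an analysis question."""
    buffer = io.StringIO()
    # Use '\n' line endings so the prompt text is consistent across platforms
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(rows)
    return "Here is a dataset in CSV format:\n\n" + buffer.getvalue() + "\n" + question


# Hypothetical monthly sales figures, for illustration only
prompt = build_data_prompt(
    ["month", "revenue"],
    [["Jan", 1200], ["Feb", 1350], ["Mar", 990]],
    "Describe the trend and flag any unusual month.",
)
print(prompt)
```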

GPT-4 is built on a transformer-based neural network. Pre-trained on a large dataset of text and images, it learns complex patterns that let it generate logical, contextually appropriate outputs.

For instance, GPT-4 can:

These capabilities stem from GPT-4’s large-scale pre-training. Because it picks up on context, tone, and intent, the model can produce outputs suited to a variety of industries. For example, businesses use GPT-4 to handle customer inquiries, while educators use it to create customized learning materials.

Its image analysis is also useful in practice. It can describe the contents of an image, identify patterns in technical diagrams, and suggest solutions to visual problems, such as troubleshooting a technical setup from a shared screenshot. These strengths make GPT-4 an adaptable resource for modern workflows.
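To troubleshoot from a local screenshot, the image can be embedded directly in the request as a base64 data URL instead of a public link. This is a minimal sketch that builds the request body only; the helper names, the default `gpt-4-turbo` model name, and the file name are assumptions.

```python
import base64
import mimetypes
from pathlib import Path


def image_file_to_data_url(path: str) -> str:
    """Encode a local image file as a base64 data URL."""
    mime_type, _ = mimetypes.guess_type(path)
    if mime_type is None or not mime_type.startswith("image/"):
        raise ValueError(f"Not a recognized image file: {path}")
    encoded = base64.b64encode(Path(path).read_bytes()).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_screenshot_question(path: str, question: str, model: str = "gpt-4-turbo") -> dict:
    """Pair a local screenshot with a troubleshooting question.

    The default model name is an assumption: use a vision-capable GPT-4 model.
    """
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_file_to_data_url(path)}},
                ],
            }
        ],
    }
```

Usage: `build_screenshot_question("error-dialog.png", "What is causing this error, and how do I fix it?")`, where `error-dialog.png` is a hypothetical file. Base64 encoding makes the payload about a third larger than the raw image, so very large screenshots are worth downscaling first.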

Businesses can rely on GPT-4 to draft professional emails, create detailed reports, and outline presentation content.

Educators and students use GPT-4 to summarize complex topics, generate study material, and analyze academic papers.

Developers leverage GPT-4 for coding assistance, debugging, and automating repetitive programming tasks.

Chatbots powered by GPT-4 provide efficient and accurate responses, improving the overall customer experience.
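Each Chat Completions request is stateless, so a chatbot has to resend the conversation history with every request, and that history must fit in the context window. One simple approach, sketched below, keeps the system prompt and drops the oldest turns once an estimated token budget is exceeded. `trim_history` is a hypothetical helper, and its token estimate uses OpenAI’s rough rule of thumb of about 4 characters per token; a real tokenizer would be more precise. The placeholder messages stand in for real conversation turns.

```python
def estimate_tokens(message: dict) -> int:
    """Rough token estimate: about 4 characters per token for English text."""
    return len(message["content"]) // 4 + 1


def trim_history(messages: list[dict], max_tokens: int) -> list[dict]:
    """Keep system messages plus the newest turns that fit in max_tokens."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(estimate_tokens(m) for m in system)

    kept = []
    # Walk from newest to oldest, stopping at the first turn that no longer fits
    for message in reversed(turns):
        cost = estimate_tokens(message)
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    # Restore chronological order after the reverse walk
    return system + kept[::-1]


history = [
    {"role": "system", "content": "You are a support bot."},
    {"role": "user", "content": "a" * 40},
    {"role": "assistant", "content": "b" * 40},
    {"role": "user", "content": "c" * 40},
]
trimmed = trim_history(history, max_tokens=30)
print([m["role"] for m in trimmed])  # ['system', 'assistant', 'user']
```

Dropping whole turns from the oldest end keeps the most recent context intact. A common refinement is to replace the dropped turns with a short model-written summary.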

Content creators can produce engaging blogs, social media posts, and video scripts tailored to specific audiences.

| Feature | GPT-4 | GPT-4 Turbo | ChatGPT |
|---|---|---|---|
| Model version | Standard GPT-4 | Optimized version of GPT-4 | Based on GPT-3.5 or GPT-4, depending on the subscription |
| Performance | High-level capabilities | Similar capabilities; faster and cheaper than GPT-4 | Varies by plan (free or paid) |
| Speed | Slower than GPT-4 Turbo | Faster response times | Varies by model (GPT-4 is slower than GPT-3.5) |
| Cost | Higher per-token price | Lower per-token price than GPT-4 | Free tier uses GPT-3.5; GPT-4 requires a paid plan |
| Model access | API and ChatGPT | API and ChatGPT | Free users get GPT-3.5; Plus subscribers get GPT-4 |
| Availability | API and ChatGPT | API and ChatGPT | Web, mobile apps, and (through the underlying models) the API |
| Use cases | Complex tasks such as creative writing and legal or technical assistance | Same as GPT-4, with improved efficiency | General-purpose assistance, basic research, and simple queries |
| Resource consumption | Higher | Lower than GPT-4 | Lower with GPT-3.5, higher with GPT-4 |
| Memory | No persistent memory between requests; limited to the context window | Same as GPT-4 | Depends on the model version |
| Context window | 8,192 tokens (32,768 with gpt-4-32k) | 128,000 tokens | Varies (GPT-3.5 typically 4,096 tokens; GPT-4 more) |
| Modalities | Text and image inputs; text output | Text and image inputs; text output | Text; image input with GPT-4 on paid plans |
| Integration | API and ChatGPT | API and ChatGPT | OpenAI products (ChatGPT, API, and others) |
| Training data | Large corpus of public and licensed data, including books and websites | Large corpus, optimized for efficiency | Depends on the underlying model (GPT-3.5 or GPT-4) |
| Fine-tuning | Limited, experimental access via the API | Same as GPT-4 | No direct fine-tuning; responses can be customized through instructions in ChatGPT |
| Multilingual support | Strong support for many languages | Same as GPT-4 | Several languages, with accuracy varying by model |
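The cost difference between GPT-4 and GPT-4 Turbo comes down to per-token prices, billed separately for input and output tokens. The sketch below estimates the cost of one request. The prices are OpenAI’s list prices per 1,000 tokens at the time of writing (GPT-4 with the 8K context, and GPT-4 Turbo); treat them as assumptions, since prices change, and check OpenAI’s pricing page before relying on them.

```python
from decimal import Decimal

# List prices in USD per 1,000 tokens as (input, output). These are assumptions:
# prices change, so confirm them on OpenAI's pricing page.
PRICES_PER_1K = {
    "gpt-4": (Decimal("0.03"), Decimal("0.06")),
    "gpt-4-turbo": (Decimal("0.01"), Decimal("0.03")),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the USD cost of one request from its token counts."""
    input_price, output_price = PRICES_PER_1K[model]
    return (input_price * input_tokens + output_price * output_tokens) / 1000


# Compare the same request (2,000 tokens in, 500 tokens out) on both models
for model in PRICES_PER_1K:
    print(model, estimate_cost(model, input_tokens=2_000, output_tokens=500))
```

`Decimal` avoids the rounding noise that binary floating point adds to currency arithmetic. At these prices, the example request costs $0.09 on GPT-4 and $0.035 on GPT-4 Turbo.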

GPT-4 enables businesses to:

This efficiency leads to cost savings and improved productivity, making GPT-4 an invaluable tool for scaling operations.

While GPT-4 offers significant advantages, challenges include:

Addressing these challenges requires thoughtful implementation and policies.

To recap, GPT-4 is a multimodal large language model that accepts image and text inputs and generates text outputs.

GPT-4’s ability to handle multimodal inputs, generate accurate outputs, and improve processes makes it a notable advancement in AI. By understanding its strengths and limitations, businesses and developers can make the most of its potential for value and innovation.

Try GPT-4 now and experience it for yourself!

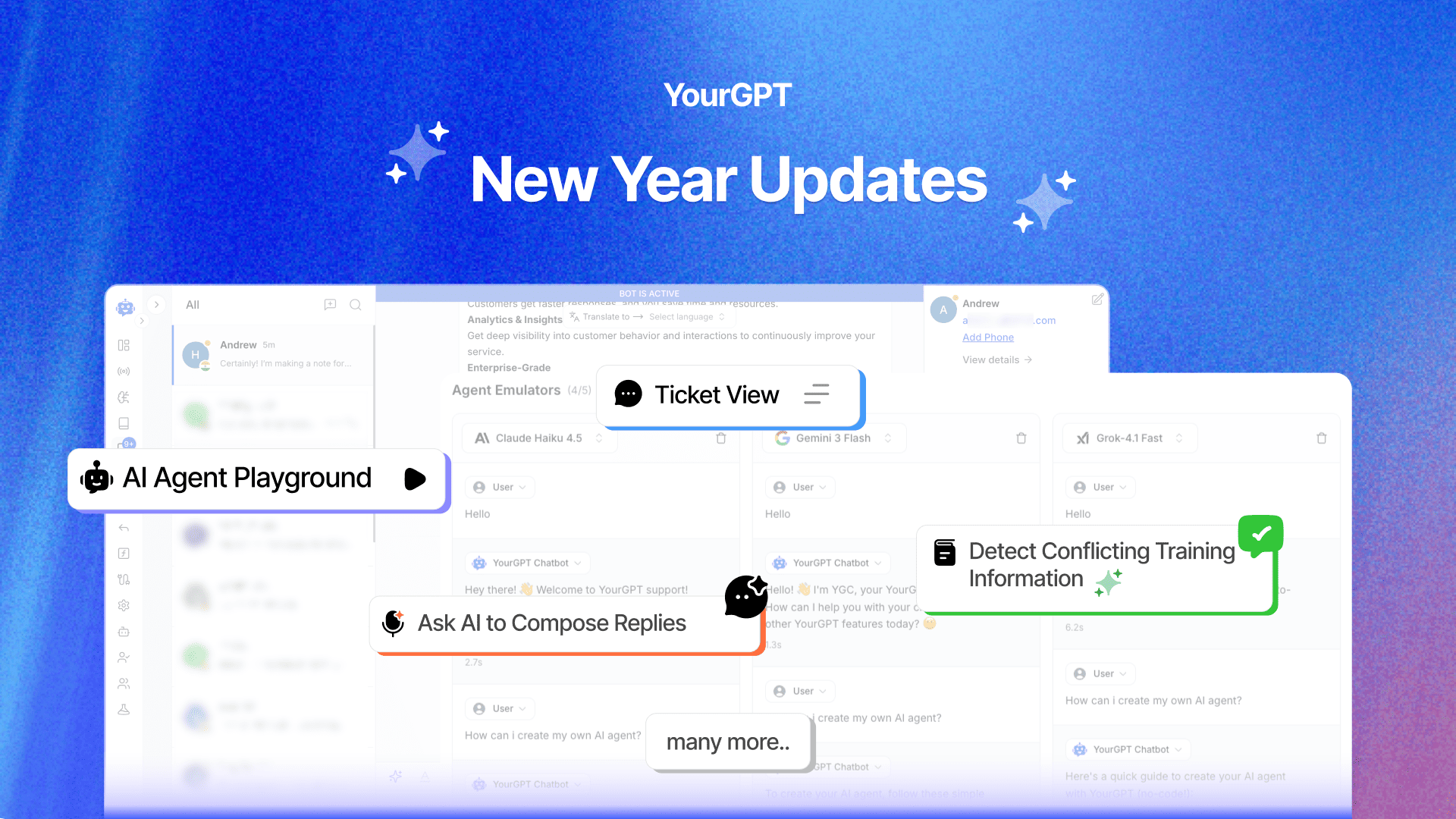
